Tuesday, March 8, 2016

Thursday, March 3, 2016

Validation Activities Related to the SaaS Application

Overview

In the Software-as-a-Service (SaaS) model, a company uses a vendor’s software application from its devices through a web browser or program interface. The client does not manage or control the underlying cloud infrastructure, including the network, servers, operating systems, storage, or even individual application capabilities, with the possible exception of application configuration settings.

SaaS and the Life Science Industry

In the life science industry, there may be a number of SaaS products throughout the organization, including customer relationship management systems, human resource information systems, laboratory information management systems, learning management systems, quality management systems, and systems in other areas. Because validation of a SaaS application is a shared responsibility between the client and the SaaS vendor, both at the deployment phase and as the software is periodically upgraded, IT and validation teams must consider new validation approaches to SaaS applications.

With a SaaS application, the vendor’s engineering and IT personnel are responsible for designing and maintaining the internal data center, computer hardware, software, security, disaster recovery, and so on. In this paper, we outline the typical steps performed by the company’s quality assurance (QA), IT, and validation teams as well as the expectations of the SaaS vendor. The shift of certain steps to the vendor makes the vendor audit a critical activity for the client’s validation, auditing, IT, and QA team, so we will share best practices related to that audit as well.

FDA Validation Overview

According to the US Food and Drug Administration, computer system validation is the formalized, documented process for testing computer software and systems as required by the Code of Federal Regulations (21 CFR 11.10(a)).

To be compliant and reduce the risk of an FDA Warning Letter, life science companies must validate all of their software, databases, spreadsheets, and computer systems and develop the appropriate documentation for all phases of the software development lifecycle (SDLC).

FDA validation guidance and the SDLC describe user site software validation in terms of installation qualification (IQ), in which documentation verifies that the system is installed according to written specifications; operational qualification (OQ), in which documentation verifies that the system operates throughout all operating ranges; and performance qualification (PQ), in which tests must span the underlying current good manufacturing practice (cGMP) business process.

In a similar way, EU Annex 11 also focuses on the product lifecycle and “user requirements” related to each system. However, Annex 11 places more emphasis on “people” and management accountability than FDA regulations—specifically, the individuals with responsibility for the business process and system maintenance.

Validation Activities for SaaS Applications

To address these regulatory obligations, life science companies typically perform a number of activities: creating the solution design document (SDD); developing the user and functional requirements; developing test scripts; and conducting IQ, OQ, and PQ testing, among others. This effort can consume the IT and validation teams’ bandwidth, adding many months to the software implementation or enhancement project. In some cases, a company may not have the qualified resources to accomplish the many validation tasks.

One of the appeals of the SaaS application is that a company can shift some of the validation effort to the SaaS vendor. This enables the company’s validation team to initially focus on an audit of the vendor’s data center along with their QA and validation methodology to ensure these activities are performed at the same standard as would be performed by the client’s own QA and validation teams. Typically, the roughly three days spent auditing the SaaS vendor can dramatically reduce the time spent validating the system.

Audit results may be incorporated into a risk assessment to leverage vendor-supplied documentation. In many cases, following an audit of the data center and SDLC methodology, a client’s audit and validation team will then develop the core validation documents:

• Validation Plan: Describes the internal activities that are part of the overall validation approach to be conducted by the client.

• User Requirements Specification: Provides details on the functionality that the clients demand from the SaaS application. These required items are often categorized as “critical,” “mandatory,” or “nice to have” and are the basis for the validation/testing effort.

• User Acceptance Test Scripts: Clients should have their own test scripts, either to augment vendor-owned QA test scripts or as unique scripts that demonstrate that the company has tested its specific usage of the system.

• Validation of Configurations and Customizations: Clients often test any unique software configurations or customized programming being developed by the vendor for the company.

• Traceability Matrix: Used for mapping user requirements to vendor documentation, UAT test scripts, and internal procedure documents.

• System Governance Procedures: May include system administration and change control for maintaining a validated state during the system’s use and operation.
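The traceability matrix in the list above can be pictured as a small data structure. The following is a minimal sketch only, assuming hypothetical requirement IDs (`URS-001` and so on), document names, and a helper called `untraced`; none of these come from a real validation package. It flags critical or mandatory requirements that have no vendor documentation or UAT script mapped to them yet.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One user requirement; IDs and evidence names are hypothetical."""
    req_id: str
    description: str
    priority: str  # "critical", "mandatory", or "nice to have"
    vendor_docs: list = field(default_factory=list)
    uat_scripts: list = field(default_factory=list)
    procedures: list = field(default_factory=list)


def untraced(requirements, priorities=("critical", "mandatory")):
    """Return IDs of requirements in the given priorities that have
    neither vendor documentation nor a UAT script mapped to them."""
    return [
        r.req_id
        for r in requirements
        if r.priority in priorities and not (r.vendor_docs or r.uat_scripts)
    ]


matrix = [
    Requirement("URS-001", "Audit trail records every change", "critical",
                vendor_docs=["VSR section 4"], uat_scripts=["UAT-010"]),
    Requirement("URS-002", "Electronic signature on approval", "critical"),
    Requirement("URS-003", "Custom dashboard colours", "nice to have"),
]

print(untraced(matrix))
```

In practice the matrix usually lives in a spreadsheet or a validation tool; the point of the sketch is only that every high-priority requirement should map to at least one piece of test evidence.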

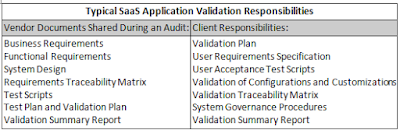

The following table summarizes the typical responsibilities as described above.

Auditing the SaaS Vendor: Best Practices

Clients often conduct an audit of the SaaS vendor at the vendor’s primary location, so the vendor can demonstrate adherence to quality software engineering and testing principles. The client’s validation teams will typically review QA documentation and project files that were developed as part of the design, development, testing, and implementation of the application.

Although qualifying a vendor by audit is not a new practice, as the SaaS model is more widely implemented, the way clients qualify the SaaS vendor must evolve to focus on the software lifecycle process.

The company should ensure that the vendor’s quality procedures demonstrate effective planning, operation, and control of its processes and documentation. The vendor’s QA team will need to show that a valid development methodology is in place and that thorough testing was used, to give the client confidence and assurance that the application is fit for production use. Furthermore, the vendor’s QA team must demonstrate that it is involved with the following activities:

• SDLC stages

• Business rules, graphical user interface design elements, and interoperability with existing features

• Test traceability and full product testing before any software enhancements are released to a production environment.

For example, below is a list of typical validation documents that SaaS vendors will be asked to present during an audit:

• Business Requirements: Focuses on the needs of the user and describes exactly what the system will do.

• Functional Requirements: Focuses on how the system will do what the user is expecting.

• System Design: Focuses on capturing the system design based on the functional requirements. It depicts screen layout, system functions, and other aspects of the user experience to fulfill the business requirements.

• Requirements Traceability Matrix: Captures the relationship between the business requirements and the system requirements, system design, and test scripts that satisfy them; this document should reflect the latest enhancements made to the platform. The SaaS vendor should ensure that documents are tracked through the use of a traceability matrix that maps the business requirements.

• Test Scripts: Used for new enhancements or custom projects. These scripts should cover basic platform functionality, audit trail functionality, reporting tools, and other high priority areas for the client. In addition, custom testing should be executed for any custom programming performed.

• Test Plan and Validation Plan: May be adopted by the vendor as standard operating procedures because the SaaS application is often multi-tenant.

• Validation Summary Report (VSR): This report summarizes vendor testing activities, discrepancies, and other validation activities.

Other key documentation that SaaS vendors may be asked to present during an audit includes:

• Product release documentation

• Project management processes

• Corrective and preventive action processes

• Service delivery processes

• Product support/maintenance (including back-up processes)

• Issue tracking processes

• Internal Audits—Vendors should periodically audit their business processes and controls

• Control—Vendors should show control of system documentation and change management

• Security—Security of buildings, hardware, documents etc.

Key Questions to Ask Vendors Related to Electronic Signatures and Audit Trails

When a life science company evaluates SaaS applications, the company should develop a standard vendor questionnaire, which may include questions such as the ones below in these general categories.

Governance/System Access

• Can access to the system be controlled by qualified personnel who will be responsible for the content of the electronic records that are on the system?

• Does the vendor have a 21 CFR Part 11 assessment in place?

• Does the vendor have a distinct qualification documentation process in place, addressing different levels of requirements and design documentation?

• Does the vendor have a structured change approach in place for infrastructure, applications, and interfaces, covering the change process (planning, execution, and documentation of changes), change logs, and the different roles involved?

Audit Trails

• Does the SaaS application generate an electronic audit trail for operator entries and actions that create, modify, or delete electronic records?

• Are date and time stamps applied automatically (as opposed to typed in manually by the user)?

• Are the source and format of the system date and time defined, controlled, unambiguous, and protected from unauthorized changes?

• Do the printed name, date, time, and signature meaning appear in every printable form of the electronic record?
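To make the audit trail questions above more concrete, here is a minimal sketch of what they are probing for: an append-only log in which the system, not the user, applies a UTC timestamp to every create, modify, or delete action. The class and field names (`AuditTrail`, `AuditEntry`, `record_id`) are illustrative assumptions, not any vendor's actual design.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: an entry cannot be edited once written
class AuditEntry:
    user: str
    action: str        # "create", "modify", or "delete"
    record_id: str
    timestamp: datetime


class AuditTrail:
    """Append-only log; timestamps come from the system clock, never the caller."""

    def __init__(self, clock=lambda: datetime.now(timezone.utc)):
        self._clock = clock
        self._entries = []

    def record(self, user, action, record_id):
        if action not in ("create", "modify", "delete"):
            raise ValueError(f"unsupported action: {action}")
        entry = AuditEntry(user, action, record_id, self._clock())
        self._entries.append(entry)
        return entry

    def entries(self):
        # Return a tuple so callers cannot append to or reorder the log.
        return tuple(self._entries)


trail = AuditTrail()
trail.record("jdoe", "create", "DOC-1")
trail.record("jdoe", "modify", "DOC-1")
for e in trail.entries():
    print(e.timestamp.isoformat(), e.user, e.action, e.record_id)
```

A real system would also have to protect the stored log and the clock source themselves from unauthorized changes, which an in-memory sketch like this cannot show.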

Electronic Signatures

• Will the electronic signature be unique to one individual and not be reused or reassigned to anyone else?

• Is the signature information for a signed electronic record shown both electronically and on printed copies of the record?

Documentation

• Does the validation documentation demonstrate that 21 CFR Part 11 requirements have been met and the controls are functioning correctly?

• Is the architecture (including hardware, software, and documentation) easily available to auditors?

• Does the vendor have processes in place for documents to ensure documentation control and to maintain integrity?

• Does the vendor have a change control process for system documentation?

Summary

Validation of a SaaS application has the potential to improve its time-to-deployment and reduce the validation effort and overall costs for the life science organization. However, the validation team within the organization must expect the same commitment to validation activities from the SaaS vendor. To summarize, here are the key steps that can ensure a successful SaaS application validation project:

1. Audit/assessment of supplier’s capabilities, quality system, SDLC, customer support, and electronic record-keeping controls

2. Development of the user requirement specifications and validation plan

3. Configuration of supplier system to meet user requirements (configuration specification)

4. Vendor testing and release to customer

5. Personnel training

6. User acceptance testing/traceability matrix

7. Development of system administration, operation, and maintenance SOPs

8. Validation summary report and release of system for production use.

Monday, December 21, 2015

Web Server and ASP.NET Application Life Cycle in Depth

Introduction

In this article, we will try to understand what happens when a user submits a request to an ASP.NET web app. There are lots of articles that explain this topic, but none that shows in a clear way what really happens in depth during the request. After reading this article, you will be able to understand:

• What is a Web Server

• HTTP - TCP/IP protocol

• IIS

• Web communication

• Application Manager

• Hosting environment

• Application Domain

• Application Pool

• How many app domains are created against a client request

• How many HttpApplications are created against a request and how I can affect this behaviour

• What is the worker process and how many of them are running against a request

• What happens in depth between a request and a response

Start from scratch

All the articles I have read usually begin with "The user sends a request to IIS... bla bla bla". Everyone knows that IIS is a web server where we host our web applications (and much more), but what is a web server?

Let's start from the real beginning.

A Web Server (like Internet Information Server/Apache/etc.) is a piece of software that enables a website to be viewed using HTTP. We can abstract this concept by saying that a web server is a piece of software that allows resources (web pages, images, etc.) to be requested over the HTTP protocol. I am sure many of you have thought that a web server is just a special super computer, but it's just the software that runs on it that makes the difference between a normal computer and a web server.

As everyone knows, in a Web Communication, there are two main actors: the Client and the Server.

The client and the server, of course, need a connection to be able to communicate with each other, and a common set of rules to be able to understand each other. The rules they need to communicate are called protocols. Conceptually, when we speak to someone, we are using a protocol. The protocols in human communication are rules about appearance, speaking, listening, and understanding. These rules, also called protocols of conversation, represent different layers of communication. They work together to help people communicate successfully. The need for protocols also applies to computing systems. A communications protocol is a formal description of digital message formats and the rules for exchanging those messages in or between computing systems and in telecommunications.

HTTP knows all the "grammar", but it doesn't know anything about how to send a message or open a connection. That's why HTTP is built on top of TCP/IP. Below, you can see the conceptual model of the HTTP protocol on top of TCP/IP:

TCP/IP is in charge of managing the connection and all the low level operations needed to deliver the message exchanged between the client and the server.

In this article, I won't explain how TCP/IP works, because that would need a whole article of its own, but it's good to know it is the engine that allows the client and the server to exchange messages.

HTTP is a connectionless protocol. This doesn't mean that the client and the server don't need to establish a connection before they start to communicate with each other; it means that they don't need any prior arrangement before they start to communicate.

Connectionless means the client doesn't care if the server is ready to accept a request, and on the other hand, the server doesn't care if the client is ready to get the response, but a connection is still needed.

In connection-oriented communication, the communicating peers must first establish a logical or physical data channel or connection in a dialog preceding the exchange of user data.

Now, let's see what happens when a user puts an address into the browser address bar.

• The browser breaks the URL into three parts:

o The protocol ("HTTP")

o The server name (www.Pelusoft.co.uk)

o The file name (index.html)

• The browser communicates with a name server to translate the server name "www.Pelusoft.co.uk" into an IP address, which it uses to connect to the server machine.

• The browser then forms a connection to the server at that IP address on port 80. (We'll discuss ports later in this article.)

• Following the HTTP protocol, the browser sends a GET request to the server, asking for the file "http://www.pelusoft.co.uk/index.html". (Note that cookies may be sent from the browser to the server with the GET request -- see How Internet Cookies Work for details.)

• The server then sends the HTML text for the web page to the browser. (Cookies may also be sent from the server to the browser in the header for the page.)

• The browser reads the HTML tags and formats the page onto your screen.
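The first steps in the list above can be sketched in a few lines. This is only an illustration, assuming the example address used in this article and a hypothetical helper named `build_get_request`. It splits the URL with the standard `urllib.parse.urlsplit` function and builds the text of a simple GET request, without actually opening a connection.

```python
from urllib.parse import urlsplit


def build_get_request(url):
    """Split a URL and return (host, port, raw GET request text)."""
    parts = urlsplit(url)
    host = parts.hostname            # server name, lower-cased by urlsplit
    port = parts.port or 80          # 80 is the default HTTP port
    path = parts.path or "/"         # file name; "/" if none was given
    request = (
        f"GET {path} HTTP/1.0\r\n"
        f"Host: {host}\r\n"
        "\r\n"                       # blank line ends the request headers
    )
    return host, port, request


host, port, request = build_get_request("http://www.pelusoft.co.uk/index.html")
print(host, port)
print(request)

# A real browser would next resolve `host` to an IP address through a name
# server (for example with socket.gethostbyname), open a TCP connection to
# that address on `port`, and send `request` over it.
```

The request text is all that HTTP itself contributes; resolving the name and delivering the bytes is the job of DNS and TCP/IP.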

The current practice requires that the connection be established by the client prior to each request, and closed by the server after sending the response. Both clients and servers should be aware that either party may close the connection prematurely, due to user action, automated time-out, or program failure, and should handle such closing in a predictable fashion. In any case, the closing of the connection by either or both parties always terminates the current request, regardless of its status.

At this point, you should have an idea about how the HTTP - TCP/IP protocol works. Of course, there is a lot more to say, but the scope of this article is just a high-level view of these protocols, to better understand all the steps that occur from the moment the user starts to browse a web site.

Now it's time to move on and focus on what happens when the web server receives the request, and how it gets the request in the first place.

As I showed earlier, a web server is a "normal computer" that is running special software that makes it a Web Server. Let's suppose that IIS runs on our web server. From a high-level view, IIS is just a process which is listening on a particular port (usually 80). Listening means it is ready to accept connections from clients on port 80. A very important thing to remember is: IIS is not ASP.NET. This means that IIS doesn't know anything about ASP.NET; it can work by itself. We can have a web server that is hosting just HTML pages or images or any other kind of web resource. The web server, as I explained earlier, just has to return the resource the browser is asking for.

ASP.NET and IIS

The web server can also support server scripting (such as ASP.NET). What I show in this section is what happens on the server running ASP.NET and how IIS can "talk" with the ASP.NET engine. When we install ASP.NET on a server, the installation updates the script map of an application so that the corresponding ISAPI extensions process the requests given to IIS. For example, the "aspx" extension will be mapped to aspnet_isapi.dll, and hence requests to IIS for an aspx page will be given to aspnet_isapi (the ASP.NET registration can also be done using Aspnet_regiis). The script map is shown below:

The ISAPI filter is a plug-in that can access an HTTP data stream before IIS gets to see it. Without the ISAPI filter, IIS cannot redirect a request to the ASP.NET engine (in the case of a .aspx page). From a high-level point of view, we can think of the ISAPI filter as a router for IIS requests: every time a resource is requested whose file extension is present in the script map, it redirects the request to the right place. In the case of an .aspx page, it redirects the request to the .NET runtime, which knows how to process the request. Now, let's see how it works.

When a request comes in:

• IIS creates/runs the worker process (w3wp.exe) if it is not running.

• The aspnet_isapi.dll is hosted in the w3wp.exe process. IIS checks the script map and routes the request to aspnet_isapi.dll.

• The request is passed to the .NET runtime, which is hosted in w3wp.exe as well.
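The routing role of the script map can be illustrated with a small lookup table. This is a conceptual sketch only, not how IIS is implemented: the `SCRIPT_MAP` dictionary, its entries, and the `route` helper are assumptions made for illustration.

```python
import os

# Illustrative script map: file extension -> handler that processes it.
SCRIPT_MAP = {
    ".aspx": "aspnet_isapi.dll",
    ".asmx": "aspnet_isapi.dll",
}


def route(path, script_map=SCRIPT_MAP):
    """Return the handler for a requested path, or 'static file' when the
    extension is not in the script map and the web server serves it itself."""
    _, ext = os.path.splitext(path)
    return script_map.get(ext.lower(), "static file")


print(route("/default.aspx"))
print(route("/images/logo.png"))
```

A request for an .aspx page is handed to the ASP.NET handler, while anything unmapped, such as an image, is returned by the web server directly.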

Finally, the request gets into the runtime

This paragraph focuses on how the runtime handles the request and shows all the objects involved in the process.

First of all, let's have a look at what happens when the request gets to the runtime.

• When ASP.NET receives the first request for any resource in an application, a class named ApplicationManager creates an application domain. (Application domains provide isolation between applications for global variables, and allow each application to be unloaded separately.)

• Within an application domain, an instance of the class named HostingEnvironment is created, which provides access to information about the application such as the name of the folder where the application is stored.

• After the application domain has been created and the Hosting Environment object instantiated, ASP.NET creates and initializes core objects such as HttpContext, HttpRequest, and HttpResponse.

• After all core application objects have been initialized, the application is started by creating an instance of the HttpApplication class.

• If the application has a Global.asax file, ASP.NET instead creates an instance of the Global.asax class that is derived from the HttpApplication class and uses the derived class to represent the application.

Those are the first steps that happen when a client request arrives. Most articles don't say anything about these steps. In this article, we will analyze in depth what happens at each step.

Below, you can see all the steps the request has to pass through before it is processed.

Application Manager

The first object we have to talk about is the Application Manager.

Application Manager is actually an object that sits on top of all running ASP.NET AppDomains, and can do things like shut them all down or check for idle status.

For example, when you change the configuration file of your web application, the Application Manager is in charge of restarting the AppDomain so that all the running application instances (your web site instances) are created again and load the new configuration.

Requests that are already in the pipeline processing will continue to run through the existing pipeline, while any new request coming in gets routed to the new AppDomain. To avoid the problem of "hung requests", ASP.NET forcefully shuts down the AppDomain after the request timeout period is up, even if requests are still pending.

Application Manager is the "manager", but the Hosting Environment contains all the "logic" to manage the application instances. It's like when you have a class that uses an interface: within the class methods, you just call the interface method. In this case, the methods are called within the Application Manager, but are executed in the Hosting Environment (let's suppose the Hosting Environment is the class that implements the interface).

At this point, you should have a question: how can the Application Manager communicate with the Hosting Environment, since the latter lives in an AppDomain? (We said the AppDomain creates a kind of boundary around the application to isolate the application itself.) In fact, the Hosting Environment has to inherit from the MarshalByRefObject class to use Remoting to communicate with the Application Manager. The Application Manager creates a remote object (the Hosting Environment) and calls methods on it.

So we can say the Hosting Environment is the "remote interface" that is used by the Application Manager, but the code is "executed" within the Hosting Environment object.

HttpApplication

In the previous section, I used the term "Application" a lot. HttpApplication is an instance of your web application. It's the object in charge of processing the request and returning the response that has to be sent back to the client. An HttpApplication can process only one request at a time. However, to maximize performance, HttpApplication instances might be reused for multiple requests, but each instance always executes one request at a time.

This simplifies application event handling because you do not need to lock non-static members in the application class when you access them. This also allows you to store request-specific data in non-static members of the application class. For example, you can define a property in the Global.asax file and assign it a request-specific value.
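The "one request at a time, but reused across requests" behaviour can be modelled with a small pool. This is a conceptual sketch under stated assumptions: `AppInstance` is a stand-in for an HttpApplication and `InstancePool` is a hypothetical helper; neither reflects ASP.NET's real internals.

```python
import queue


class AppInstance:
    """Stand-in for an HttpApplication: handles one request at a time."""

    def __init__(self, name):
        self.name = name
        self.handled = 0   # per-instance state, safe without locks

    def process(self, request):
        self.handled += 1
        return f"{self.name} handled {request}"


class InstancePool:
    """Hands out free instances and takes them back for reuse."""

    def __init__(self, size):
        self._free = queue.Queue()
        for i in range(size):
            self._free.put(AppInstance(f"app-{i}"))

    def handle(self, request):
        instance = self._free.get()       # blocks until an instance is free
        try:
            return instance.process(request)
        finally:
            self._free.put(instance)      # return the instance for reuse


pool = InstancePool(size=2)
results = [pool.handle(f"req-{n}") for n in range(4)]
for line in results:
    print(line)
```

Because an instance is taken out of the pool while it works, no two requests ever touch the same instance at once, which is why its non-static members need no locking.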

You can't manually create an instance of HttpApplication; the Application Manager is in charge of doing that. You can only configure the maximum number of HttpApplications you want the Application Manager to create. Several attributes of the processModel element in the machine config can affect the Application Manager's behaviour:

    <processModel
        enable="true|false"
        timeout="hrs:mins:secs|Infinite"
        idleTimeout="hrs:mins:secs|Infinite"
        shutdownTimeout="hrs:mins:secs|Infinite"
        requestLimit="num|Infinite"
        requestQueueLimit="num|Infinite"
        restartQueueLimit="num|Infinite"
        memoryLimit="percent"
        webGarden="true|false"
        cpuMask="num"
        userName=""
        password=""
        logLevel="All|None|Errors"
        clientConnectedCheck="hrs:mins:secs|Infinite"
        comAuthenticationLevel="Default|None|Connect|Call|Pkt|PktIntegrity|PktPrivacy"
        comImpersonationLevel="Default|Anonymous|Identify|Impersonate|Delegate"
        responseDeadlockInterval="hrs:mins:secs|Infinite"
        responseRestartDeadlockInterval="hrs:mins:secs|Infinite"
        autoConfig="true|false"
        maxWorkerThreads="num"
        maxIoThreads="num"
        minWorkerThreads="num"
        minIoThreads="num"
        serverErrorMessageFile=""
        pingFrequency="Infinite"
        pingTimeout="Infinite"
        maxAppDomains="2000"
    />

With minWorkerThreads and maxWorkerThreads, you set the minimum and maximum number of worker threads and, with them, the number of HttpApplications that can be processing requests at the same time.

For more information, have a look at: ProcessModel Element.

To recap what we have said so far: when a request reaches a web application, the following happens:

• A Worker Process w3wp.exe is started (if it is not running).

• An instance of ApplicationManager is created.

• An ApplicationPool is created.

• An instance of a Hosting Environment is created.

• A pool of HttpApplication instances is created (as configured in machine.config).

So far, we have talked about just one web application, let's say WebSite1, under IIS. What happens if we create another application under IIS for WebSite2?

• We will have the same process explained above.

• WebSite2 will be executed within the existing Worker Process w3wp.exe (where WebSite1 is running).

• The same Application Manager instance will manage WebSite2 as well. There is always one instance per worker process (w3wp.exe).

• WebSite2 will have its own AppDomain and Hosting Environment.

It's very important to notice that each web application runs in a separate AppDomain so that if one fails or does something wrong, it won't affect the other web apps that can carry on their work. At this point, we should have another question:

What would happen if a Web Application, let's say WebSite1, does something wrong affecting the Worker Process (even if it's quite difficult)?

What if I want to recycle the application domain?

To summarize what we have said, an AppPool consists of one or more processes. Each web application that you are running consists of (usually, IIRC) an Application Domain. The issue is when you assign multiple web applications to the same AppPool, while they are separated by the Application Domain boundary, they are still in the same process (w3wp.exe). This can be less reliable/secure than using a separate AppPool for each web application. On the other hand, it can improve performance by reducing the overhead of multiple processes.

An Internet Information Services (IIS) application pool is a grouping of URLs that is routed to one or more worker processes. Because application pools define a set of web applications that share one or more worker processes, they provide a convenient way to administer a set of web sites and applications and their corresponding worker processes. Process boundaries separate each worker process; therefore, a web site or application in an application pool will not be affected by application problems in other application pools. Application pools significantly increase both the reliability and manageability of a web infrastructure.

Introduction

In this article, we will try to understand what happens when a user submits a request to an ASP.NET web app. There are lots of articles that explain this topic, but none that shows in a clear way what really happens in depth during the request. After reading this article, you will be able to understand:

• What is a Web Server

• HTTP - TCP/IP protocol

• IIS

• Web communication

• Application Manager

• Hosting environment

• Application Domain

• Application Pool

• How many app domains are created against a client request

• How many HttpApplications are created against a request and how I can affect this behaviour

• What is the work processor and how many of it are running against a request

• What happens in depth between a request and a response

Start from scratch

All the articles I have read usually begins with "The user sends a request to IIS... bla bla bla". Everyone knows that IIS is a web server where we host our web applications (and much more), but what is a web server?

Let start from the real beginning.

A Web Server (like Internet Information Server/Apache/etc.) is a piece of software that enables a website to be viewed using HTTP. We can abstract this concept by saying that a web server is a piece of software that allows resources (web pages, images, etc.) to be requested over the HTTP protocol. I am sure many of you have thought that a web server is just a special super computer, but it's just the software that runs on it that makes the difference between a normal computer and a web server.

As everyone knows, in a Web Communication, there are two main actors: the Client and the Server.

The client and the server, of course, need a connection to be able to communicate with each other, and a common set of rules to be able to understand each other. The rules they need to communicate are called protocols. Conceptually, when we speak to someone, we are using a protocol. The protocols in human communication are rules about appearance, speaking, listening, and understanding. These rules, also called protocols of conversation, represent different layers of communication. They work together to help people communicate successfully. The need for protocols also applies to computing systems. A communications protocol is a formal description of digital message formats and the rules for exchanging those messages in or between computing systems and in telecommunications.

HTTP knows all the "grammar", but it doesn't know anything about how to send a message or open a connection. That's why HTTP is built on top of TCP/IP. Below, you can see the conceptual model of this protocol on top of the HTTP protocol:

TCP/IP is in charge of managing the connection and all the low level operations needed to deliver the message exchanged between the client and the server.

In this article, I won't explain how TCP/IP works, because I should write a whole article on it, but it's good to know it is the engine that allows the client and the server to have message exchanges.

HTTP is a connectionless protocol, but it doesn't mean that the client and the server don't need to establish a connection before they start to communicate with each other. But, it means that the client and the server don't need to have any prearrangement before they start to communicate.

Connectionless means the client doesn't care if the server is ready to accept a request, and on the other hand, the server doesn't care if the client is ready to get the response, but a connection is still needed.

In connection-oriented communication, the communicating peers must first establish a logical or physical data channel or connection in a dialog preceding the exchange of user data.

Now, let's see what happens when a user puts an address into the browser address bar.

• The browser breaks the URL into three parts:

o The protocol ("HTTP")

o The server name (www.Pelusoft.co.uk)

o The file name (index.html)

• The browser communicates with a name server to translate the server name "www.Pelusoft.co.uk" into an IP address, which it uses to connect to the server machine.

• The browser then forms a connection to the server at that IP address on port 80. (We'll discuss ports later in this article.)

• Following the HTTP protocol, the browser sends a GET request to the server, asking for the file "http://www.Pelusoft.co.uk/index.html". (Note that cookies may be sent from the browser to the server with the GET request; see How Internet Cookies Work for details.)

• The server then sends the HTML text for the web page to the browser. (Cookies may also be sent from the server to the browser in the header for the page.)

• The browser reads the HTML tags and formats the page onto your screen.
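The first steps above can be sketched in a few lines of Python. This is only an illustration of what the browser does conceptually, not how any real browser is implemented; the function name build_get_request is made up for this example, and the name lookup and the actual network connection are deliberately left out.

```python
from urllib.parse import urlsplit


def build_get_request(url):
    """Split a URL into its parts and build the text of an HTTP GET request."""
    parts = urlsplit(url)
    scheme = parts.scheme        # the protocol, e.g. "http"
    host = parts.hostname        # the server name (urlsplit lower-cases it)
    port = parts.port or 80      # port 80 is the default for plain HTTP
    path = parts.path or "/"     # the file name, e.g. "/index.html"

    # A real browser would now ask a name server for the IP address of
    # `host` and open a TCP connection to that address on `port`.
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )
    return scheme, host, port, request


scheme, host, port, request = build_get_request("http://www.Pelusoft.co.uk/index.html")
print(request)
```

The request text is what travels over the TCP/IP connection; everything about delivering it is left to the lower layers.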

The current practice requires that the connection be established by the client prior to each request, and closed by the server after sending the response. Both clients and servers should be aware that either party may close the connection prematurely, due to user action, automated time-out, or program failure, and should handle such closing in a predictable fashion. In any case, the closing of the connection by either or both parties always terminates the current request, regardless of its status.

At this point, you should have an idea of how HTTP works on top of TCP/IP. Of course, there is a lot more to say, but this article only aims to give a very high-level view of these protocols, so that you can better understand the steps that occur from the moment the user starts to browse a web site.

Now it's time to move on and focus on what happens when the web server receives the request, and how the request reaches it in the first place.

As I showed earlier, a web server is a "normal computer" running special software that makes it a web server. Let's suppose that IIS runs on our web server. From a very high-level view, IIS is just a process listening on a particular port (usually 80). Listening means it is ready to accept connections from clients on port 80. A very important thing to remember is: IIS is not ASP.NET. This means that IIS doesn't know anything about ASP.NET; it can work by itself. We can have a web server that is hosting just HTML pages or images or any other kind of web resource. The web server, as I explained earlier, just has to return the resource the browser is asking for.

ASP.NET and IIS

The web server can also support server-side scripting (such as ASP.NET). This section shows what happens on a server running ASP.NET and how IIS can "talk" with the ASP.NET engine. When we install ASP.NET on a server, the installation updates the script map of an application to point to the corresponding ISAPI extensions that process the requests given to IIS. For example, the "aspx" extension is mapped to aspnet_isapi.dll, and hence requests to IIS for an aspx page are handed to aspnet_isapi (the ASP.NET registration can also be done using Aspnet_regiis).

The ISAPI filter is a plug-in that can access an HTTP data stream before IIS gets to see it. Without the ISAPI filter, IIS cannot redirect a request to the ASP.NET engine (in the case of an .aspx page). From a very high-level point of view, we can think of the ISAPI filter as a router for IIS requests: every time a resource is requested whose file extension is present in the script map, it redirects the request to the right place. In the case of an .aspx page, it redirects the request to the .NET runtime, which knows how to process the request. Now, let's see how it works.

When a request comes in:

• IIS creates/runs the worker process (w3wp.exe) if it is not running.

• The aspnet_isapi.dll is hosted in the w3wp.exe process. IIS checks for the script map and routes the request to aspnet_isapi.dll.

• The request is passed to the .NET runtime, which is hosted in w3wp.exe as well.
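To make the routing idea concrete, here is a small conceptual model in Python. It is not IIS code and does not use any IIS API: the SCRIPT_MAP dictionary and the route_request function are hypothetical names, and the "static file handler" label simply stands for IIS serving the resource by itself.

```python
import os

# Hypothetical script map: file extension -> component that handles it.
SCRIPT_MAP = {
    ".aspx": "aspnet_isapi.dll",
}


def route_request(path):
    """Return the name of the component that should process the requested path."""
    _, extension = os.path.splitext(path)
    # Extensions that are not in the script map are served directly.
    return SCRIPT_MAP.get(extension.lower(), "static file handler")


print(route_request("/default.aspx"))   # handled by the ASP.NET ISAPI extension
print(route_request("/index.html"))     # served as a plain resource
```

The point of the sketch is only that the decision is driven by the file extension of the requested resource.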

Finally, the request gets into the runtime

This paragraph focuses on how the runtime handles the request and shows all the objects involved in the process.

First of all, let's have a look at what happens when the request gets to the runtime.

• When ASP.NET receives the first request for any resource in an application, a class named ApplicationManager creates an application domain. (Application domains provide isolation between applications for global variables, and allow each application to be unloaded separately.)

• Within an application domain, an instance of the class named HostingEnvironment is created, which provides access to information about the application such as the name of the folder where the application is stored.

• After the application domain has been created and the Hosting Environment object instantiated, ASP.NET creates and initializes core objects such as HttpContext, HttpRequest, and HttpResponse.

• After all core application objects have been initialized, the application is started by creating an instance of the HttpApplication class.

• If the application has a Global.asax file, ASP.NET instead creates an instance of the Global.asax class that is derived from the HttpApplication class and uses the derived class to represent the application.

These are the first steps that happen in response to a client request. Most articles don't say anything about these steps. In this article, we will analyze in depth what happens at each step.

The sections below walk through all the steps the request has to pass through before it is processed.

Application Manager

The first object we have to talk about is the Application Manager.

Application Manager is actually an object that sits on top of all running ASP.NET AppDomains, and can do things like shut them all down or check for idle status.

For example, when you change the configuration file of your web application, the Application Manager is in charge of restarting the AppDomain so that all the running application instances (your web site instances) are created again and load the new configuration.

Requests that are already in the pipeline processing will continue to run through the existing pipeline, while any new request coming in gets routed to the new AppDomain. To avoid the problem of "hung requests", ASP.NET forcefully shuts down the AppDomain after the request timeout period is up, even if requests are still pending.

Application Manager is the "manager", but the Hosting Environment contains all the "logic" to manage the application instances. It's like when you have a class that uses an interface: within the class methods, you just call the interface method. In this case, the methods are called within the Application Manager, but are executed in the Hosting Environment (let's suppose the Hosting Environment is the class that implements the interface).

At this point, you should have a question: how can the Application Manager communicate with the Hosting Environment, given that the latter lives in an AppDomain? (We said the AppDomain creates a kind of boundary around the application to isolate the application itself.) In fact, the Hosting Environment has to inherit from the MarshalByRefObject class so that it can use Remoting to communicate with the Application Manager. The Application Manager creates a remote object (the Hosting Environment) and calls methods on it.

So we can say the Hosting Environment is the "remote interface" that is used by the Application Manager, but the code is "executed" within the Hosting Environment object.

HttpApplication

In the previous section, I used the term "Application" a lot. HttpApplication is an instance of your web application. It's the object in charge of processing the request and returning the response that has to be sent back to the client. An HttpApplication can process only one request at a time. To maximize performance, HttpApplication instances may be reused for multiple requests, but each instance always executes one request at a time.

This simplifies application event handling because you do not need to lock non-static members in the application class when you access them. This also allows you to store request-specific data in non-static members of the application class. For example, you can define a property in the Global.asax file and assign it a request-specific value.
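The "one request at a time per instance, instances reused across requests" idea can be modelled in a few lines. This is a conceptual Python sketch, not ASP.NET code: the Application and InstancePool classes and the current_user attribute are invented for the illustration.

```python
import queue


class Application:
    """Stands in for one HttpApplication instance (hypothetical model)."""

    def __init__(self, ident):
        self.ident = ident
        self.current_user = None   # request-specific data in an instance member

    def process(self, user):
        # No lock is needed: while this instance is checked out of the pool,
        # no other request can touch its members.
        self.current_user = user
        return f"app{self.ident} served {self.current_user}"


class InstancePool:
    """Hands out free instances and takes them back after each request."""

    def __init__(self, size):
        self._free = queue.Queue()
        for ident in range(size):
            self._free.put(Application(ident))

    def handle(self, user):
        app = self._free.get()      # blocks until an instance is free
        try:
            return app.process(user)
        finally:
            self._free.put(app)     # the instance is reused by later requests


pool = InstancePool(size=2)
responses = [pool.handle(user) for user in ("alice", "bob", "carol")]
print(responses)
```

With two instances and three requests, the third request is served by an instance that already served an earlier one, which is exactly the reuse described above.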

You can't manually create an instance of HttpApplication; the Application Manager is in charge of doing that. You can only configure the maximum number of HttpApplications you want the Application Manager to create. A number of attributes in the machine.config file can affect the Application Manager's behaviour:

processModel enable="true|false"

timeout="hrs:mins:secs|Infinite"

idleTimeout="hrs:mins:secs|Infinite"

shutdownTimeout="hrs:mins:secs|Infinite"

requestLimit="num|Infinite"

requestQueueLimit="num|Infinite"

restartQueueLimit="num|Infinite"

memoryLimit="percent"

webGarden="true|false"

cpuMask="num"

userName="

password="

logLevel="All|None|Errors"

clientConnectedCheck="hrs:mins:secs|Infinite"

comAuthenticationLevel="Default|None|Connect|Call|Pkt|PktIntegrity|PktPrivacy"

comImpersonationLevel="Default|Anonymous|Identify|Impersonate|Delegate"

responseDeadlockInterval="hrs:mins:secs|Infinite"

responseRestartDeadlockInterval="hrs:mins:secs|Infinite"

autoConfig="true|false"

maxWorkerThreads="num"

maxIoThreads="num"

minWorkerThreads="num"

minIoThreads="num"

serverErrorMessageFile=""

pingFrequency="Infinite"

pingTimeout="Infinite"

maxAppDomains="2000"

With maxWorkerThreads and minWorkerThreads, you set up the minimum and maximum number of HttpApplications.

For more information, have a look at: ProcessModel Element.

To recap what we have said so far: when a request for a web application arrives, the following happens:

• A Worker Process w3wp.exe is started (if it is not running).

• An instance of ApplicationManager is created.

• An ApplicationPool is created.

• An instance of a Hosting Environment is created.

• A pool of HttpApplication instances is created (sized according to machine.config).

Until now, we talked about just one WebApplication, let's say WebSite1, under IIS. What happens if we create another application under IIS for WebSite2?

• We will have the same process explained above.

• WebSite2 will be executed within the existing Worker Process w3wp.exe (where WebSite1 is running).

• The same Application Manager instance will manage WebSite2 as well. There is always one instance per worker process (w3wp.exe).

• WebSite2 will have its own AppDomain and Hosting Environment.

It's very important to notice that each web application runs in a separate AppDomain so that if one fails or does something wrong, it won't affect the other web apps that can carry on their work. At this point, we should have another question:

What would happen if a Web Application, let's say WebSite1, does something wrong affecting the Worker Process (even if it's quite difficult)?

What if I want to recycle the application domain?

To summarize what we have said, an AppPool consists of one or more processes. Each web application that you are running consists of (usually, IIRC) an Application Domain. The issue is when you assign multiple web applications to the same AppPool, while they are separated by the Application Domain boundary, they are still in the same process (w3wp.exe). This can be less reliable/secure than using a separate AppPool for each web application. On the other hand, it can improve performance by reducing the overhead of multiple processes.

An Internet Information Services (IIS) application pool is a grouping of URLs that is routed to one or more worker processes. Because application pools define a set of web applications that share one or more worker processes, they provide a convenient way to administer a set of web sites and applications and their corresponding worker processes. Process boundaries separate each worker process; therefore, a web site or application in an application pool will not be affected by application problems in other application pools. Application pools significantly increase both the reliability and manageability of a web infrastructure.
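The trade-off between sharing an application pool and using separate pools can be illustrated with a toy model. The sketch below is plain Python, not an IIS API; the pool names PoolA and PoolB and the site WebSite3 are made up for the example, and each pool is simplified to a single worker process.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerProcess:
    """One w3wp.exe process hosting one AppDomain per web application."""
    name: str
    app_domains: list = field(default_factory=list)
    alive: bool = True

    def crash(self):
        # A process-level failure takes down every AppDomain it hosts.
        self.alive = False


def running_sites(pools):
    """List the sites whose worker process is still alive."""
    return sorted(
        site
        for process in pools.values()
        if process.alive
        for site in process.app_domains
    )


pools = {
    "PoolA": WorkerProcess("w3wp.exe (PoolA)", ["WebSite1", "WebSite2"]),
    "PoolB": WorkerProcess("w3wp.exe (PoolB)", ["WebSite3"]),
}

pools["PoolA"].crash()        # WebSite1 brings its worker process down
print(running_sites(pools))   # only the site in the other pool survives
```

WebSite2 is lost together with WebSite1 because both share a process, while the site in the other pool is unaffected.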

Thursday, October 8, 2015

Change Request Management

Introduction

If project success means completing the project on time, within budget, and with the originally agreed-upon features and functionality, few software projects are rated successful.

Statement of Problem

In any enterprise software project, managing the changes in requirements is a very difficult task and it could become chaotic. If it is not properly managed, the consequences could be very costly to the project and it could ultimately result in the project’s failure.

Some Reasons for Failure

Poor requirements management: We forge ahead with development without user input and a clear understanding of the problems we attempt to solve.

Inadequate change management: Changes are inevitable; yet we rarely track them or understand their impact.

Poor resource allocation: Resource allocation is not re-negotiated consistently with the accepted Change Requests.

Changing Requirements

Software requirements are subject to continuous change, for good and bad reasons. The real problem, however, is not that software requirements change during the life of a project, but that they usually change outside a framework of disciplined planning and control processes. If adequately managed, Change Requests (CRs) can be precious opportunities to achieve better customer satisfaction and profitability. If not managed, CRs are threats to the project’s success.

Change Request Management (CRM)

CRM addresses the organizational infrastructure required to assess the cost and schedule impact of a requested change to the existing product. It also addresses the workings of a Change Review Team or Change Control Board.

Change Request

A Change Request (CR) is a formally submitted artifact that is used to track all stakeholder requests (including new features, enhancement requests, defects, changed requirements, etc.) along with related status information throughout the project lifecycle.

Change Tracking

Change Tracking describes what is done to components for what reason and at what time. It serves as history and rationale of changes. It is quite separate from assessing the impact of proposed changes as described under 'Change Request Management'.
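As a rough illustration of the two definitions above, a Change Request can be modelled as a record that carries its own status and a history of what was changed, why, and when. The field names, status values and the CR-1 example below are assumptions made for this sketch, not part of any standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ChangeRequest:
    identifier: str
    kind: str                 # e.g. "new feature", "enhancement", "defect"
    description: str
    status: str = "Submitted"
    history: list = field(default_factory=list)

    def set_status(self, new_status, reason, when=None):
        """Change the status and record what changed, why, and at what time."""
        when = when or datetime.now(timezone.utc)
        self.history.append((when, self.status, new_status, reason))
        self.status = new_status


cr = ChangeRequest("CR-1", "defect", "Login page rejects valid passwords")
cr.set_status("Approved", "In scope for the current release")

for when, old, new, reason in cr.history:
    print(f"{when:%Y-%m-%d} {old} -> {new}: {reason}")
```

The history list is the change-tracking part: it is only ever appended to, so it can serve as the rationale for each step.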

Change or Configuration Control Board (CCB)

The CCB is the board that oversees the change process. It consists of representatives from all interested parties, including customers, developers, and users. In a small project, a single team member, such as the project manager or software architect, may play this role.

CCB Review Meeting

The function of this meeting is to review Submitted Change Requests. An initial review of the contents of the Change Request is done in the meeting to determine if it is a valid request. If so, then a determination is made if the change is in or out of scope for the current release(s), based on priority, schedule, resources, level-of-effort, risk, severity and any other relevant criteria as determined by the group.
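The two-stage decision made in the review meeting (is the request valid, and if so is it in scope) could be sketched as follows. The criteria used here are a deliberate simplification: only priority and level-of-effort are checked, and the threshold values and names are hypothetical; a real board would weigh schedule, risk, severity and other criteria as well.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    title: str
    is_valid: bool      # outcome of the initial review of the contents
    priority: int       # 1 = highest
    effort_days: int    # estimated level-of-effort


def review(submission, capacity_days, priority_cutoff=2):
    """Return the review outcome for one submitted Change Request."""
    if not submission.is_valid:
        return "Rejected"
    in_scope = (
        submission.priority <= priority_cutoff
        and submission.effort_days <= capacity_days
    )
    return "In scope" if in_scope else "Out of scope"


print(review(Submission("Fix login defect", True, 1, 5), capacity_days=10))
print(review(Submission("Redesign reports", True, 3, 5), capacity_days=10))
print(review(Submission("Duplicate request", False, 1, 1), capacity_days=10))
```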

Why control change across the life cycle?

“Uncontrollable change is a common source of project chaos, schedule slips and quality problems.”

Impact analysis

Impact analysis provides an accurate understanding of the implications of a proposed change, helping you make informed business decisions about which proposals to approve. The analysis examines the context of the proposed change to identify existing components that might have to be modified or discarded, identify new work products to be created, and estimate the effort associated with each task.

Traceability

Traceability provides a methodical and controlled process for managing the changes that inevitably occur during application development. Without tracing, every change would require reviewing documents on an ad-hoc basis to see if any other elements of the project need updating.
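One simple way to picture how traceability supports impact analysis is a set of trace links that can be followed downstream from the changed item. The link table and the item identifiers below (REQ-1, DESIGN-1 and so on) are invented for the example.

```python
from collections import deque

# Hypothetical trace links: each item points to the items derived from it.
TRACE_LINKS = {
    "REQ-1": ["DESIGN-1"],
    "DESIGN-1": ["CODE-1", "CODE-2"],
    "CODE-1": ["TEST-1"],
    "CODE-2": ["TEST-2"],
    "REQ-2": ["DESIGN-2"],
}


def impacted_items(changed, links):
    """Follow the trace links breadth-first and collect everything downstream."""
    seen = set()
    pending = deque([changed])
    while pending:
        item = pending.popleft()
        for downstream in links.get(item, []):
            if downstream not in seen:
                seen.add(downstream)
                pending.append(downstream)
    return sorted(seen)


print(impacted_items("REQ-1", TRACE_LINKS))
```

A change to REQ-1 touches one design element, two code units and their tests, while REQ-2 and its design are untouched, so they need no review.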

Establishing a Change Control Process

The following activities are required to establish CRM:

Establish the Change Request Process

Establish the Change Control Board

Define Change Review Notification Protocols

Wednesday, July 29, 2015

10 things to be a GooD TesteR

So, here you go. Please prepend the condition “you are good at testing when” to each point and read through:

1. You understand priorities:

A software tester unknowingly becomes a good time manager, because the first thing he or she needs to understand is priority. Most of the time, you are given a module or functionality to test and a timeline (which is always tight), and you need to deliver results. These regular challenges teach you how to prioritize.

As a tester, you need to understand what should be tested and what should be given less priority, what should be automated and what should be tested manually, which task should be taken up first and what can be done at the last moment. Once you have mastered defining priorities, software testing becomes much easier. But, my friend, understanding priority only comes with experience, so patience and an alert eye are the most helpful weapons.

2. You ask questions:

Asking questions is the most important part of software testing. If you fail at it, you are going to lose an important bunch of information.

Questions can be asked:

• To understand requirement

• To understand changes done

• To understand how requirement has been implemented

• To understand how the bug was fixed

• To understand bug fix effects

• To understand the product from other perspectives like development, business etc.

Asking these questions helps you understand the overall picture and define the coverage.

3. You can generate a number of ideas:

When you can generate a number of ideas to test the product, you stand out from the crowd, as most of the time people feel satisfied after writing ordinary functional and performance test cases.

In my view, a real tester’s job starts only after writing ordinary test cases. The more you think about how the product can be used in different ways, the more ideas you will be able to generate to test it, and ultimately you will gain confidence in the product, customer satisfaction and lifelong experience.

So, be an idea generator if you want to be good at testing.

4. You can analyze data:

Being a tester, you are not expected to do testing only. You need to understand the data collected from testing and analyze it for particular behaviour of the application or product. Most of the time, we hear about non-reproducible bugs. There is no bug that is non-reproducible: if it occurred once, it is going to pop up a second time. But to get to the root cause, you need to analyze the test environment, the test data, the interruptions etc.

Also, as we all know, automation testing is mostly about analyzing test results: creating scripts and executing them numerous times is not a big task, but analyzing the data generated after executing those scripts is the most important part.

5. You can report negative things in positive way:

A tester needs to learn tactics to deal with everyone around and needs to be good at communication. No one feels good when told that whatever they did was completely or partially wrong. But it makes a whole lot of difference to the reaction when you suggest doing or rectifying something with better ideas and without an egoistic tone.

Details are also important, so provide details about the negative things you saw and how they can affect the product/application overall.

No one would deny rectifying it.

6. You are good at reporting:

You worked the whole day, executed a number of test cases and marked them as pass/fail in the “test management tool”. What would your status be at the end of the day? No one is interested in knowing how many test cases you executed. People want a short and sweet description of your whole day’s work.

So from now on, write your “status report” as: what you did (at most 3 sentences), what you found (with bug numbers) and what you will do next.

7. You are flexible to support whenever it’s required:

The duty of a software tester does not end after reporting a bug. If the developer is not able to reproduce the bug, you are expected to help reproduce it, because only then will the developer be able to fix it.

Also, tight timelines for software testing make many testers neglect quality. The right approach is proper planning and an extra effort to cover whatever is required.

8. You are able to correlate real-life scenarios with software testing:

When you are able to correlate testing with real life, it’s easy. Get into the habit of thinking up test cases for everyday things: how to test a pen, how to test a headphone, how to test a monument, and see how it helps in the near future. It will help your mind to constantly generate ideas and relate testing to practical things.

9. You are a constant learner:

Software testing is challenging because you need to learn new things constantly. It’s not about gaining expertise in a specific scripting language; it’s about keeping up with the latest technology, about learning automation tools, about learning to create ideas, about learning from experience and ultimately about constantly thriving.

10. You can wear end user’s shoes:

You are a good tester only when you can understand your customer. Customer is King and you need to understand his/her needs. If the product does not satisfy customer needs, no matter how useful it is, it is not going to work. And it is a tester’s responsibility to understand the customer.

Friday, June 26, 2015

21 CFR 11.10(k): Document Control

Organizations that use FDA regulated computer systems must have a document control system. This document control system must include provisions for document approval, revision, and storage. They must also have defined procedures to use and administer the computer system.

Text of 21 CFR 11.10(k)

Persons who use closed systems to create, modify, maintain, or transmit electronic records shall employ procedures and controls designed to ensure the authenticity, integrity, and, when appropriate, the confidentiality of electronic records, and to ensure that the signer cannot readily repudiate the signed record as not genuine. Such procedures and controls shall include the following:

11.10(k) Use of appropriate controls over systems documentation including:

(1) Adequate controls over the distribution of, access to, and use of documentation for system operation and maintenance.

(2) Revision and change control procedures to maintain an audit trail that documents time-sequenced development and modification of systems documentation.

Interpretation

Document control is required for any documentation for this system, including SOPs and validation documents. This may be accomplished through a company’s existing document control procedures. There should be change control procedures that cover changes in system documentation. This may be covered by company document control procedures.

Implementation

An organization needs policies for creating compliant documentation and making changes to that documentation. All expired versions of SOPs or other compliant documentation should be retained for future regulatory review. All computer systems require a procedure describing the operation, maintenance, security, and administration for the system.
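As one possible way to picture the "time-sequenced audit trail" of 11.10(k)(2), the sketch below keeps every revision of a document in an append-only list, so that superseded versions remain available for review. The class names, the document title SOP-001 and the author names are hypothetical, and a real document control system would add approval and access controls on top of this.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Revision:
    """One immutable entry in the audit trail."""
    version: int
    author: str
    summary: str
    timestamp: datetime


class ControlledDocument:
    def __init__(self, title):
        self.title = title
        self._revisions = []    # append-only; old versions are never removed

    def revise(self, author, summary, timestamp=None):
        """Record a new revision with the next version number and a timestamp."""
        revision = Revision(
            version=len(self._revisions) + 1,
            author=author,
            summary=summary,
            timestamp=timestamp or datetime.now(timezone.utc),
        )
        self._revisions.append(revision)
        return revision

    @property
    def current(self):
        return self._revisions[-1]

    @property
    def audit_trail(self):
        # A tuple so callers cannot modify the history.
        return tuple(self._revisions)


sop = ControlledDocument("SOP-001 System Operation")
sop.revise("j.doe", "Initial release")
sop.revise("a.smith", "Updated backup procedure")

for revision in sop.audit_trail:
    print(revision.version, revision.author, revision.summary)
```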

If you need more information or assistance with training on document control or assessing your document control system, please contact us to arrange consultation services.

Frequently Asked Questions

Q: Does every computer system require an operation and use procedure?

A: The use of every compliant computer system should be proceduralized. This can be documented in a system-specific SOP, or it can be documented with the associated procedure where the computer system is used.

21 CFR 11.10(j): Policies for Using Electronic Signatures

If an FDA regulated computer system uses electronic signatures, the organization must have procedures which define practices for using electronic signatures within the organization.

Text of 21 CFR 11.10(j)

Persons who use closed systems to create, modify, maintain, or transmit electronic records shall employ procedures and controls designed to ensure the authenticity, integrity, and, when appropriate, the confidentiality of electronic records, and to ensure that the signer cannot readily repudiate the signed record as not genuine. Such procedures and controls shall include the following:

11.10(j) The establishment of, and adherence to, written policies that hold individuals accountable and responsible for actions initiated under their electronic signatures, in order to deter record and signature falsification.

Interpretation

There should be policies that clearly state that the electronic signing is the same as a person’s handwritten signature and that all responsibilities that apply to handwritten signatures also apply to electronic signatures.

Implementation

An organization requires a clear policy on the use of electronic signatures, including a statement signed by all employees who will use electronic signatures that they understand an electronic signature is legally equivalent to a hand-written signature.

If you need more information or assistance with training on policies for using electronic signatures or assessing policies for using electronic signatures, please contact us to arrange consultation services.

Compare this requirement with Annex 11, Section 14, Electronic Signatures.

Frequently Asked Questions

Q: Are all employees required to sign our policy on the use of electronic signatures?

A: Only employees who will use a computer system with electronic signatures are required to be trained on the use of electronic signatures.

21 CFR 11.10(i): Education, Training and Experience

Individuals who use FDA regulated computer systems should have the appropriate education, training, or experience to operate the system.

Text of 21 CFR 11.10(i)

Persons who use closed systems to create, modify, maintain, or transmit electronic records shall employ procedures and controls designed to ensure the authenticity, integrity, and, when appropriate, the confidentiality of electronic records, and to ensure that the signer cannot readily repudiate the signed record as not genuine. Such procedures and controls shall include the following:

11.10(i) Determination that persons who develop, maintain, or use electronic record/electronic signature systems have the education, training, and experience to perform their assigned tasks.

Interpretation

All users (including system administrators) must be trained before they are assigned tasks in the system. All users should be appropriately trained on the process regulated by the computer system.

Implementation

Describe any training that a person should receive before they are allowed to use this system. Include relevant SOPs, STMs, etc.

If you need training on 21 CFR 11 or validation, would like assistance assessing your training systems to see whether they are compliant, or need to proceduralize your training program, please contact us to arrange consultation services.

Compare this requirement with Annex 11, Section 2, Personnel.

Frequently Asked Questions

Q: How do we document adequate training?

A: Before being granted system access, a user should be trained according to your company’s procedures for training. This training should be documented and retained. In addition, an organization should retain a CV for all employees and contractors who perform GxP operations.
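The "trained before access is granted" rule can be expressed as a simple check against the documented training records. The required training items named below are placeholders for this sketch; the actual list would come from your own procedures.

```python
# Hypothetical list of training a user must have completed for this system.
REQUIRED_TRAINING = {"SOP-001", "21 CFR Part 11 basics"}


def may_grant_access(completed_training):
    """Return (allowed, missing items) based on the user's training record."""
    missing = REQUIRED_TRAINING - set(completed_training)
    return (not missing, sorted(missing))


print(may_grant_access(["SOP-001"]))
print(may_grant_access(["SOP-001", "21 CFR Part 11 basics"]))
```

Returning the missing items as well as the decision makes it easy to document why access was refused.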

21 CFR 11.10(h): Input Checks

FDA regulated computer systems should have the appropriate controls in place to ensure that data inputs are valid. This verification is called an input check.

Text of 21 CFR 11.10(h)

Persons who use closed systems to create, modify, maintain, or transmit electronic records shall employ procedures and controls designed to ensure the authenticity, integrity, and, when appropriate, the confidentiality of electronic records, and to ensure that the signer cannot readily repudiate the signed record as not genuine. Such procedures and controls shall include the following:

11.10(h) Use of device (e.g., terminal) checks to determine, as appropriate, the validity of the source of data input or operational instruction.

Interpretation

The system should be able to perform an input check to ensure the source of the data being input is valid. In some cases, this means a monitor should be available such that someone entering data can see what they entered. This can also mean that data is restricted to particular input devices or sources. Data should not be entered into a regulated computer system without the owner knowing the source of the data.

Implementation

Document how data is input into the system. If data is being collected from another external system, describe the connection to that source and how the system verifies the identity of the source data.
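As an illustration of verifying the identity of a data source, here is a minimal Python sketch. It assumes a hypothetical registry of approved sources (`APPROVED_SOURCES`); the source identifiers, the `Reading` type, and the function names are invented for this example and do not come from any particular product.

```python
from dataclasses import dataclass

# Hypothetical registry of approved input sources (identifiers are illustrative).
APPROVED_SOURCES = {
    "BALANCE-01": "instrument",
    "BARCODE-07": "barcode_reader",
    "LIMS-GATEWAY": "external_system",
}


@dataclass(frozen=True)
class Reading:
    source_id: str  # identifier reported by the device or external system
    value: str      # raw data received from that source


def check_source(reading: Reading) -> str:
    """Return the registered source type, or raise if the source is unknown."""
    try:
        return APPROVED_SOURCES[reading.source_id]
    except KeyError:
        raise PermissionError(
            f"Unrecognized input source: {reading.source_id!r}"
        ) from None


def accept(reading: Reading, records: list) -> None:
    """Store a reading only after its source has been verified."""
    source_type = check_source(reading)
    records.append(
        {"source": reading.source_id, "type": source_type, "value": reading.value}
    )
```

In this sketch, data from an unregistered source is rejected before it reaches the record store, and each stored record carries the identifier of the source it came from.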

If you need more information or assistance with training on input checks or assessing your systems to see if they have adequate input checks, please contact us to arrange consultation services.

Compare this requirement with Annex 11 Section 6, Accuracy Checks.

Frequently Asked Questions

Q: Does every data field require verification before entry into the system?

A: In general, only critical fields require data verification. However, the more a program restricts extraneous data entry through drop-down lists, restricted numeric ranges, date ranges, and similar controls, the better the quality of the data will generally be. When users are allowed to enter any value, unexpected values will eventually be entered.
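The kinds of restrictions mentioned above can be sketched in a few lines of Python. The field names, the allowed values, and the limits below are assumptions chosen for illustration only; a real system would take them from its own requirements.

```python
from datetime import date

# Illustrative field rules (assumed values, not regulatory requirements).
ALLOWED_STATUSES = {"Pass", "Fail", "Retest"}  # equivalent of a drop-down list
PH_RANGE = (0.0, 14.0)                         # restricted numeric range
EARLIEST_TEST_DATE = date(2000, 1, 1)          # lower bound of the date range


def validate_entry(status: str, ph: float, test_date: date, today: date) -> list:
    """Return a list of validation errors; an empty list means the entry is acceptable."""
    errors = []
    if status not in ALLOWED_STATUSES:
        errors.append(f"status must be one of {sorted(ALLOWED_STATUSES)}")
    if not PH_RANGE[0] <= ph <= PH_RANGE[1]:
        errors.append(f"pH must be between {PH_RANGE[0]} and {PH_RANGE[1]}")
    if not EARLIEST_TEST_DATE <= test_date <= today:
        errors.append("test date must fall between the earliest allowed date and today")
    return errors
```

Returning every error at once, rather than stopping at the first, lets the person entering data correct all fields in one pass.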

Q: Do I need to specifically validate that my system accepts data from a keyboard or mouse?

A: Generally speaking, we document that the use of the keyboard and mouse is tested implicitly throughout the validation and do not create a specific test case to verify input from these devices. If a system uses another data entry source, such as a bar code reader, we generally do include a test to verify that data is successfully entered into the system.

21 CFR 11.10(g): Authority Checks

FDA regulated computer systems should enforce user roles within a system. This process of verifying a user role within a system is called an authority check. For example, only a member of the QA group should be able to provide QA approval, and only a system administrator should be able to create a new user.

Text of 21 CFR 11.10(g)

Persons who use closed systems to create, modify, maintain, or transmit electronic records shall employ procedures and controls designed to ensure the authenticity, integrity, and, when appropriate, the confidentiality of electronic records, and to ensure that the signer cannot readily repudiate the signed record as not genuine. Such procedures and controls shall include the following:

11.10(g) Use of authority checks to ensure that only authorized individuals can use the system, electronically sign a record, access the operation or computer system input or output device, alter a record, or perform the operation at hand.

Interpretation

The system should authorize users before allowing them to access or alter records. This may include different levels of security within the system. The number of security groups in a system will be dependent upon the complexity of the system and the amount of granularity that an organization requires for use of a computer system. For example, a laboratory instrument may have only a few user groups (Standard User, Tester, Administrator, etc.), while a large eDMS may have dozens of user groups.

Implementation

Document the levels of security within the system. Verify appropriate implementation of user-level security during the validation process.
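One way to picture an authority check is as a permission matrix that maps each role to the actions it may perform. The sketch below is a simplified illustration; the role names, action names, and the `PERMISSIONS` table are assumptions for this example and are not taken from any specific system.

```python
from enum import Enum


class Role(Enum):
    GENERAL = "general"
    QA = "qa"
    ADMINISTRATOR = "administrator"


# Hypothetical permission matrix: which actions each role may perform.
PERMISSIONS = {
    Role.GENERAL: {"add_record", "edit_record"},
    Role.QA: {"add_record", "edit_record", "qa_approve"},
    Role.ADMINISTRATOR: {"add_record", "edit_record", "delete_record", "create_user"},
}


def is_authorized(role: Role, action: str) -> bool:
    """Return True only if the role has been granted the action."""
    return action in PERMISSIONS.get(role, set())


def perform(role: Role, action: str) -> str:
    """Run the authority check before the action is carried out."""
    if not is_authorized(role, action):
        raise PermissionError(f"{role.value} may not perform {action}")
    return f"{action} performed by {role.value}"
```

During validation, a matrix like this can also drive the test cases: each role and action pair becomes either a positive test (access granted) or a negative test (access refused).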

If you need more information or assistance with training on authority checks or assessing your systems to see if they have adequate authority checks, please contact us to arrange consultation services.

Compare this requirement with Annex 11 Section 12, Security, and Section 15, Batch Release.

Frequently Asked Questions

Q: At a minimum, how many security levels should our system have?

A: There should be a General level that allows use of the system (adding or editing records, but no rights to delete records) and an Administrator level that can delete records or perform user administration tasks.

21 CFR 11.10(f): Operational System Checks

FDA regulated computer systems should have sufficient controls or operational system checks to ensure that users must follow required procedures. For example, if a computer system regulates the release of a manufactured product, the computer system should not authorize the release until the appropriate Quality approval has been provided.

Text of 21 CFR 11.10(f)

Persons who use closed systems to create, modify, maintain, or transmit electronic records shall employ procedures and controls designed to ensure the authenticity, integrity, and, when appropriate, the confidentiality of electronic records, and to ensure that the signer cannot readily repudiate the signed record as not genuine. Such procedures and controls shall include the following:

11.10(f) Use of operational system checks to enforce permitted sequencing of steps and events, as appropriate.

Interpretation

The system should not allow steps to occur in the wrong order. For example, should it be necessary to create, delete, or modify records in a particular sequence, operational system checks would ensure that the proper sequence is followed. Another example would be system checks that prevent changes to a record after it has been reviewed and signed.

Implementation

Document how the computer system prevents steps from occurring in the wrong order. If it is necessary to create, delete, or modify records in a particular sequence, explain how operational system checks will ensure that the proper sequence of events is followed.
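Enforcing a permitted sequence of steps is often modeled as a small state machine. The following Python sketch assumes a hypothetical record lifecycle (draft, in review, approved, released); the state names and the `ALLOWED_TRANSITIONS` table are illustrative and would differ from system to system.

```python
# Hypothetical record lifecycle: each state lists the states that may follow it.
ALLOWED_TRANSITIONS = {
    "draft": {"in_review"},
    "in_review": {"draft", "approved"},
    "approved": {"released"},
    "released": set(),
}


class SequenceError(Exception):
    """Raised when a step is attempted out of order."""


class Record:
    def __init__(self) -> None:
        self.state = "draft"
        self.history = ["draft"]  # ordered log of the states the record has passed through

    def advance(self, new_state: str) -> None:
        """Move to new_state only if the transition is permitted from the current state."""
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise SequenceError(f"cannot move from {self.state!r} to {new_state!r}")
        self.state = new_state
        self.history.append(new_state)
```

With this structure, an attempt to release a record that is still in draft is refused, and the transition table itself can serve as the documented description of the permitted sequence.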

If you need more information or assistance with training on operational system checks or assessing your systems to see if they have adequate operational system checks, please contact us to arrange consultation services.

Frequently Asked Questions

Q: Can you provide some examples of operational system checks?

A: An operational system check is any system control that enforces a particular workflow. For example, when approving a batch release, a system might require electronic signatures from both manufacturing and quality control before the batch status can be changed to released. Another system may require that once an electronic signature is attached to a record, the record can no longer be modified. In this case, applying the electronic signature would trigger a control that locks the record against edits until the electronic signature is removed.
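The two examples in this answer can be combined in a short Python sketch. It assumes a hypothetical `BatchRecord` class and two required signing departments; signatures are reduced to department names here, whereas a real electronic signature would also capture the signer's identity, the date and time, and the meaning of the signature.

```python
# Departments whose signatures are required before release (assumed for illustration).
REQUIRED_SIGNATURES = {"manufacturing", "quality_control"}


class BatchRecord:
    def __init__(self, batch_id: str) -> None:
        self.batch_id = batch_id
        self.status = "pending"
        self.data = {}
        self.signatures = set()

    @property
    def locked(self) -> bool:
        """A record is locked while at least one signature is attached."""
        return bool(self.signatures)

    def edit(self, field: str, value) -> None:
        if self.locked:
            raise PermissionError("record is signed and cannot be modified")
        self.data[field] = value

    def sign(self, department: str) -> None:
        if department not in REQUIRED_SIGNATURES:
            raise ValueError(f"{department} is not a signing department")
        self.signatures.add(department)

    def remove_signature(self, department: str) -> None:
        if self.status == "released":
            raise PermissionError("signatures cannot be removed after release")
        self.signatures.discard(department)

    def release(self) -> None:
        """Change the status to released only when every required signature is present."""
        missing = REQUIRED_SIGNATURES - self.signatures
        if missing:
            raise PermissionError(f"missing signatures: {sorted(missing)}")
        self.status = "released"
```

In this sketch, the first signature locks the record, release is refused until both departments have signed, and removing all signatures before release reopens the record for editing.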

Q: Can you provide some examples of operational system checks?