Thursday, March 10, 2016

Uses of Controllers in JMeter

JMeter's built-in Logic Controllers control when and how user requests are sent to the web server under test. A Logic Controller also determines the order in which requests are sent to the server.

Logic Controllers

Logic Controllers let users define the sequence in which requests are sent to the server within a Thread; for example, JMeter's Random Controller can be used to send HTTP requests to the server randomly. In short, Logic Controllers define the order in which user requests are executed.

Some commonly used Logic Controllers are:

• Recording Controller

• Simple Controller

• Loop Controller

• Random Controller

• Module Controller

Recording Controller

The Recording Controller is a placeholder that stores the requests captured when a test session is recorded in JMeter.

Simple Controller

The Simple Controller is a container that holds user requests. Unlike other Logic Controllers, it does not provide any customization, randomization, or change of loop count.

Loop Controller

The Loop Controller runs its user requests either a fixed number of times or forever, as illustrated in the figure.

Random Controller

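The Random Controller introduces randomness into how user requests run on each loop. Strictly speaking, JMeter's Random Controller picks one of its child requests at random on each loop pass, whereas the Random Order Controller runs every child once per loop in a shuffled order; the example below describes the second behaviour. Suppose a test of http://www.google.com contains 3 user requests in the following order: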

1. HTTP request

2. FTP request

3. JDBC request

Each user request runs 5 times, i.e. the HTTP request runs 5 times, the FTP request runs 5 times, and the JDBC request runs 5 times, so JMeter sends a total of 5 × 3 = 15 requests to the server.

Without randomization, each loop sends the requests sequentially in this order:

HTTP request -> FTP request -> JDBC request

With randomization, each loop sends them in a random order, for example:

FTP request -> HTTP request -> JDBC request

Or

JDBC request -> FTP request -> HTTP request
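The two random behaviours are easy to confuse, so here is a small Python simulation (not JMeter code) contrasting them. It assumes three child samplers and five loop iterations as in the example above; the function names are hypothetical stand-ins for the Random Controller (one child picked per pass) and the Random Order Controller (all children, shuffled, per pass).

```python
import random

CHILDREN = ["HTTP request", "FTP request", "JDBC request"]

def random_controller(children, loops, rng):
    """Mimic a Random Controller: one child chosen at random on each loop pass."""
    return [rng.choice(children) for _ in range(loops)]

def random_order_controller(children, loops, rng):
    """Mimic a Random Order Controller: every child once per loop, in shuffled order."""
    sent = []
    for _ in range(loops):
        order = list(children)  # copy so the configured order is left untouched
        rng.shuffle(order)
        sent.extend(order)
    return sent

rng = random.Random(42)  # fixed seed so the run is repeatable
print(len(random_controller(CHILDREN, 5, rng)))        # 5
print(len(random_order_controller(CHILDREN, 5, rng)))  # 15
```

Only the second variant reaches the 15 requests computed above, which is why the element you pick matters when you size a test.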

Module Controller

The Module Controller brings modularity to a test plan by running a module (for example, a Simple Controller) that is defined elsewhere in the plan. For example, a web application typically has functions such as sign-in, sign-out, account creation, and password change; each function can be stored as a module in a Simple Controller, and the Module Controller chooses which module to run. Suppose a simulation in which 50 users sign in, 100 users sign out, and 30 users search on the website www.google.com.

Using JMeter, you can create 3 modules, each simulating one user activity: sign-in, sign-out, and search. The Module Controller selects which module to run.
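As a rough analogy (plain Python, not JMeter's API), the modules can be pictured as named functions and the Module Controller as a lookup that runs whichever module it references. The request strings and function names below are hypothetical; the user counts come from the scenario above.

```python
from typing import Callable, Dict, List

# Hypothetical modules, each standing in for a Simple Controller holding one activity.
def sign_in() -> str:
    return "POST /signin"

def sign_out() -> str:
    return "GET /signout"

def search() -> str:
    return "GET /search?q=jmeter"

MODULES: Dict[str, Callable[[], str]] = {
    "sign-in": sign_in,
    "sign-out": sign_out,
    "search": search,
}

def module_controller(module_name: str) -> str:
    """Run the module referenced by name, as a Module Controller points at a stored module."""
    return MODULES[module_name]()

# Scenario from the text: 50 sign-ins, 100 sign-outs, 30 searches.
scenario = {"sign-in": 50, "sign-out": 100, "search": 30}
requests: List[str] = []
for name, users in scenario.items():
    requests.extend(module_controller(name) for _ in range(users))

print(len(requests))  # 180
```

Because each activity is defined once and referenced by name, changing the sign-in steps means editing a single module rather than every place it is used.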

Some More Important Controllers

Interleave Controller: Selects only one of its child requests to run on each loop of the thread, moving on to the next child on the following loop.

Runtime Controller: Determines how long its children are allowed to run. For example, if the Runtime Controller is set to 10 seconds, JMeter runs its children for 10 seconds.

Transaction Controller: Measures the overall time taken to execute all of the samplers nested under it.

Include Controller: Includes an external test plan (JMX file) in the current plan, letting you reuse parts of multiple test plans in JMeter.
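To illustrate the Interleave and Runtime Controllers described above, here is a minimal Python sketch of their selection and timing logic. It is only an analogy under simple assumptions (children are plain labels, and an "action" is any callable); the helper names are hypothetical.

```python
import time
from itertools import cycle

def interleave(children, iterations):
    """Interleave Controller sketch: one child per loop iteration, taken in turn."""
    rotation = cycle(children)
    return [next(rotation) for _ in range(iterations)]

def run_for(seconds, action):
    """Runtime Controller sketch: keep running the action until the time budget is spent."""
    deadline = time.monotonic() + seconds
    runs = 0
    while time.monotonic() < deadline:
        action()
        runs += 1
    return runs

print(interleave(["A", "B", "C"], 5))  # ['A', 'B', 'C', 'A', 'B']
print(run_for(0.05, lambda: time.sleep(0.01)) >= 1)  # True
```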

A Brief Look at the Loop Controller

This section walks through each step involved in adding a Loop Controller to an existing performance test plan.

The Loop Controller makes its samplers run a specific number of times, and this count is multiplied by the loop value you define for the Thread Group. For example, if you add one HTTP Request to a Loop Controller with a loop count of 50 and set the Thread Group loop count to 2, JMeter will send a total of 50 * 2 = 100 HTTP Requests. The steps are explained below:

• Configuring Thread Group

• Adding Loop Controller

• Configuring Loop Controller

• Add View Results in Table

• Run the Test

First Step: Configuring Thread Group

Add Thread Group:

To add the Thread Group, first start JMeter; then, in the JMeter interface, select Test Plan in the tree, right-click, and choose Add -> Threads (Users) -> Thread Group.

After opening the Thread Group, enter the Thread Properties as shown in the figure below:

In the figure above, Number of Threads is 1 (one user connects to the target website), Loop Count is 2 (the test runs twice), and Ramp-Up Period is 1 second.

The Thread Count and the Loop Count are different settings. A Thread Count of 1 simulates one user trying to connect to the target website. A Loop Count of 2 makes that one user connect to the target website 2 times.

Add JMeter elements:

Here we will add the “HTTP Request Defaults” element.

To add this element, right-click the Thread Group and, from the context menu, select Add -> Config Element -> HTTP Request Defaults.

In the HTTP Request Defaults page, enter the Website name (www.google.com) under Web server -> Server name or IP.

Adding Loop Controller:

To open the Loop Controller pane, right-click the Thread Group and select Add -> Logic Controller -> Loop Controller.

Second Step: Configuring Loop Controller:

The figure below shows Loop Count: 50, which means one user request is sent to the web server google.com 50 times. JMeter combines this with the loop value of 2 specified for the Thread Group in the earlier figure, so it sends a total of 2 * 50 = 100 HTTP Requests.
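The arithmetic generalises to any nesting of threads and loops. The small Python helper below (a hypothetical name, not part of JMeter) multiplies the settings together; the optional `samplers` parameter is an assumption for plans that put several samplers inside the Loop Controller.

```python
def total_requests(threads: int, thread_group_loops: int,
                   loop_controller_count: int, samplers: int = 1) -> int:
    """Requests sent = threads x Thread Group loops x Loop Controller count x samplers in the loop."""
    return threads * thread_group_loops * loop_controller_count * samplers

# Settings from this walkthrough: 1 thread, Thread Group loop count 2, Loop Controller count 50.
print(total_requests(threads=1, thread_group_loops=2, loop_controller_count=50))  # 100
```

With 10 threads instead of 1, the same plan would send 1,000 requests, which is worth checking before pointing a test at a shared server.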

Right-click the Loop Controller and, from the context menu, click Add -> Sampler -> HTTP Request.

Third Step: Add View Results in Table:

To view the test results in tabular format, right-click the Thread Group and select Add -> Listener -> View Results in Table.

View Results in Table will display as shown in the figure below:

Fourth Step: Run the test:

After adding View Results in Table, click the Start button on the toolbar (or press Ctrl+R) to run the test.

Elements of JMeter

In the previous article we covered how to install JMeter on your machine and start running your first JMeter script. Each part or component of JMeter is called an Element; Elements work together but are designed for different purposes. Before you start working with JMeter, it is best to know all of its Elements in detail. This article describes the Elements of JMeter and why they are useful.

The figure below presents some common elements in JMeter:

• Test Plan

• Thread Group

• Controllers

• Listeners

• Timers

• Configuration Elements

• Pre-Processor Elements

• Post-Processor Elements

• Execution order of Test Elements

Test Plan

A test plan is the top-level element in JMeter; it describes the sequence of steps executed at run time. A complete test plan is made up of one or more Thread Groups, Samplers, Logic Controllers, Listeners, Timers, Assertions, and Configuration Elements. Each Sampler can be preceded by one or more Pre-Processor elements and followed by Post-Processor and/or Assertion elements. The test plan is saved as an XML file with the .jmx extension.

Because a .jmx file is plain-text XML, it can be used across different systems and opened in any text editor. The editor's “find and replace” function can then quickly change a CMS name, web server name, or document ID that might appear hundreds of times throughout the test plan, with very little effort.
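The same find-and-replace can be scripted. The sketch below works on the text of a plan; the JMX fragment is a tiny hand-written example (`HTTPSampler.domain` is the property JMeter uses for the server name, while `staging.example.com` is a hypothetical target). For a real file you would read it with something like `Path("plan.jmx").read_text()` and write the result back.

```python
from typing import Tuple

def replace_in_plan(jmx_text: str, old: str, new: str) -> Tuple[str, int]:
    """Plain-text find and replace, as in an editor; returns (updated text, occurrences replaced)."""
    return jmx_text.replace(old, new), jmx_text.count(old)

# A tiny fragment of a JMX file; real plans contain many more elements.
SAMPLE = '''<stringProp name="HTTPSampler.domain">www.google.com</stringProp>
<stringProp name="HTTPSampler.path">/</stringProp>
<stringProp name="HTTPSampler.domain">www.google.com</stringProp>'''

updated, count = replace_in_plan(SAMPLE, "www.google.com", "staging.example.com")
print(count)  # 2
```

A blind text replace also touches comments and unrelated values that happen to match, so it is worth reviewing the diff before running the modified plan.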

Thread Group

The Thread Group is the starting point of a test plan. As the name suggests, a Thread Group represents a group of threads; within this group, each thread simulates one real user sending requests to the server.

The Thread Group settings let you set the number of threads in each group, as well as the ramp-up time and the number of test iterations.

If you set 10 threads, JMeter will create and simulate 10 users sending requests to the server under test.

Controllers

There are two types of controllers: 1) Samplers 2) Logical Controllers

1) Samplers

Samplers let JMeter send specific types of requests to the server. A sampler simulates a user's request for a page from the target server. For example, to use the POST, GET, or DELETE methods of an HTTP service, add an HTTP Request sampler. JMeter provides the following samplers, among others:

• HTTP Request

• FTP Request

• JDBC Request

• Java Request

• SOAP/XML Request

• RPC Requests

An HTTP Request Sampler looks like the following figure:

2) Logical Controllers

Logic Controllers decide the order in which Samplers are processed in a Thread, providing a mechanism to control the flow of the Thread Group. They let you customize the logic JMeter uses to decide when to send requests, and they can alter the order of requests coming from their child elements.

JMeter provides the following Logic Controllers:

• Runtime Controller

• If Controller

• Transaction Controller

• Recording Controller

• Simple Controller

• While Controller

• Switch Controller

• ForEach Controller

• Module Controller

• Include Controller

• Loop Controller

• Once Only Controller

• Interleave Controller

• Random Controller

• Random Order Controller

• Throughput Controller

A ForEach Controller Control Panel looks like the following figure,

Test Fragments

The Test Fragment is a special type of element placed at the same level as the Thread Group element. It differs from a Thread Group in that it is not executed unless it is referenced by either a Module Controller or an Include Controller. This element is purely for code reuse within Test Plans.

Listeners

Listeners let you view Sampler results as tables, graphs, trees, or simple text in log files, and give visual access to the data JMeter collects as each Sampler executes. Listeners provide a means to collect, save, and view the results of a test plan, storing them in XML format or in a more efficient (but less detailed) CSV format. Their output can also be viewed directly within JMeter.

Listeners can be placed anywhere in the test, including directly under the test plan, and they gather data only from elements at or below their level.
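Because the CSV format is plain text, results saved by a listener can be post-processed outside JMeter. The sketch below assumes a trimmed set of columns named after JMeter's default CSV header (`timeStamp`, `elapsed`, `label`, `responseCode`, `success`); the rows are made-up sample data, and your saved files may contain more or different columns depending on configuration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical rows in a trimmed JMeter CSV result layout.
JTL = """timeStamp,elapsed,label,responseCode,success
1457600000000,334,Visit Google Home Page,200,true
1457600000200,310,Visit Google Home Page,200,true
1457600000400,512,Visit Google Home Page,500,false
"""

def average_by_label(csv_text: str) -> dict:
    """Average elapsed time (ms) per sampler label from CSV listener output."""
    totals = defaultdict(lambda: [0, 0])  # label -> [sum of elapsed ms, sample count]
    for row in csv.DictReader(io.StringIO(csv_text)):
        entry = totals[row["label"]]
        entry[0] += int(row["elapsed"])
        entry[1] += 1
    return {label: total / count for label, (total, count) in totals.items()}

print(average_by_label(JTL))
```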

JMeter provides the following Listeners:

• Graph Results

• Spline Visualizer

• Assertion Results

• Simple Data Writer

• Monitor Results

• Distribution Graph (alpha)

• Aggregate Graph

• Mailer Visualizer

• BeanShell Listener

• Summary Report

• Sample Result Save Configuration

• Graph Full Results

• View Results Tree

• Aggregate Report

• View Results in Table

Timers

When you perform load/stress testing using only threads, controllers, and samplers, JMeter will blast your application with nonstop requests, because each JMeter thread sends its requests without pausing between samplers. This is not characteristic of real traffic, and it is usually not what you want. Adding a Timer element lets you define a period to wait between requests.

So, to simulate real traffic, or to create extreme load/stress in a controlled way, JMeter provides Timer elements.

The following types of Timers are available:

• Synchronizing Timer

• JSR223 Timer

• BeanShell Timer

• Gaussian Random Timer

• Uniform Random Timer

• Constant Throughput Timer

• BSF Timer

• Poisson Random Timer
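The delays produced by the constant and random timers can be approximated in a few lines of Python. This is a sketch of the idea (a constant offset plus optional random noise), not JMeter's exact implementation; in particular, clamping the Gaussian delay at zero is our own simplification, and the helper names are hypothetical.

```python
import random

def constant_delay(ms: float) -> float:
    """Constant Timer: the same pause before every request."""
    return ms

def uniform_random_delay(offset_ms: float, max_random_ms: float, rng: random.Random) -> float:
    """Uniform Random Timer idea: constant offset plus a uniform extra delay in [0, max_random_ms]."""
    return offset_ms + rng.uniform(0, max_random_ms)

def gaussian_random_delay(offset_ms: float, deviation_ms: float, rng: random.Random) -> float:
    """Gaussian Random Timer idea: constant offset plus normally distributed noise, never negative."""
    return max(0.0, offset_ms + rng.gauss(0, deviation_ms))

rng = random.Random(1)  # seeded so repeated runs give the same delays
print(constant_delay(300))  # 300
print(uniform_random_delay(300, 100, rng))
print(gaussian_random_delay(300, 100, rng))
```

Random delays spread requests out the way real users do, which avoids the artificial lock-step load of threads that all pause for exactly the same time.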

As an example, the Constant Timer Control Panel looks like this:

Configuration Elements

A Configuration Element is a simple element where you can collect configuration values common to all samplers, such as the web server's hostname or the database URL.
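A configuration element such as HTTP Request Defaults acts as a shared fallback: a sampler field left blank takes the default, and a field filled in on the sampler wins. The Python sketch below models that merge with dictionaries; the field names are simplified, hypothetical keys rather than JMeter's internal property names.

```python
from typing import Dict

def apply_defaults(defaults: Dict[str, str], sampler: Dict[str, str]) -> Dict[str, str]:
    """Fill every field the sampler leaves empty with the shared default value."""
    merged = dict(defaults)
    merged.update({key: value for key, value in sampler.items() if value})
    return merged

defaults = {"protocol": "https", "server": "www.google.com", "port": "443"}
search_page = {"server": "", "path": "/search"}  # server left blank on purpose

print(apply_defaults(defaults, search_page))
# {'protocol': 'https', 'server': 'www.google.com', 'port': '443', 'path': '/search'}
```

Keeping the host name in one place means that pointing the whole plan at another server is a single edit.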

These are some commonly used configuration elements in JMeter:

• Java Request Defaults

• LDAP Request Defaults

• LDAP Extended Request Defaults

• Keystore Configuration

• JDBC Connection Configuration

• Login Config Element

• CSV Data Set Config

• FTP Request Defaults

• TCP Sampler Config

• User Defined Variables

• HTTP Authorization Manager

• HTTP Cache Manager

• HTTP Cookie Manager

• HTTP Proxy Server

• HTTP Request Defaults

• HTTP Header Manager

• Simple Config Element

• Random Variable

Pre-Processor Elements

A Pre-Processor executes before a sampler executes. It is typically used to modify the settings of a Sample Request before it runs, or to update variables that are not extracted from response text.

JMeter provides the following Pre-Processor Elements:

• JDBC PreProcessor

• JSR223 PreProcessor

• RegEx User Parameters

• BeanShell PreProcessor

• BSF PreProcessor

• HTML Link Parser

• HTTP URL Re-writing Modifier

• HTTP User Parameter Modifier

• User Parameters

Post-Processor Elements

A Post-Processor executes after a sampler finishes. This element is mainly useful for processing the response data, for example, to extract a particular value for later use.

JMeter provides the following Post-Processor Elements:

• CSS/JQuery Extractor

• BeanShell PostProcessor

• JSR223 PostProcessor

• JDBC PostProcessor

• Debug PostProcessor

• Regular Expression Extractor

• XPath Extractor

• Result Status Action Handler

• BSF PostProcessor
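A typical post-processing step is pulling a value such as a token out of a response with a regular expression and reusing it as a variable in a later request. The Python sketch below imitates that flow in the spirit of the Regular Expression Extractor and `${name}` variable references; the helper names, the sample HTML, and the `/account` path are hypothetical, and JMeter's special match numbers (0 for random, negative for all) are not modelled.

```python
import re

def regex_extract(response_body: str, pattern: str, default: str, match_no: int = 1) -> str:
    """Return group 1 of the match_no-th match (1-based), or the default when nothing matches."""
    matches = re.findall(pattern, response_body)
    if len(matches) >= match_no:
        return matches[match_no - 1]
    return default

def substitute(template: str, variables: dict) -> str:
    """Replace ${name} references with stored values, leaving unknown names untouched."""
    return re.sub(r"\$\{(\w+)\}", lambda m: variables.get(m.group(1), m.group(0)), template)

variables = {}
body = '<input type="hidden" name="csrf_token" value="abc123">'
variables["token"] = regex_extract(body, r'name="csrf_token" value="([^"]+)"', default="NOT_FOUND")

print(variables["token"])                                   # abc123
print(substitute("/account?token=${token}", variables))    # /account?token=abc123
```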

Execution Order of Test Elements

The test plan elements are executed in the following order:

• Configuration elements

• Pre-Processors

• Timers

• Sampler

• Post-Processors (unless SampleResult is null)

• Assertions (unless SampleResult is null)

• Listeners (unless SampleResult is null)
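The ordering rule, including the condition on a null SampleResult, can be written down as a short Python function. This is only a restatement of the list above as code, with a hypothetical function name; it does not reproduce JMeter's scoping rules for where each element sits in the tree.

```python
from typing import List, Optional

def run_sampler_scope(sample_result: Optional[str]) -> List[str]:
    """Return the order in which element types fire around one sampler."""
    steps = ["configuration elements", "pre-processors", "timers", "sampler"]
    if sample_result is not None:
        # Post-processors, assertions and listeners only run when the sampler produced a result.
        steps += ["post-processors", "assertions", "listeners"]
    return steps

print(run_sampler_scope("200 OK"))
print(run_sampler_scope(None))
```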

Introduction to Apache JMeter

Apache JMeter is an open source application with powerful testing abilities. A web server hosts many applications and serves many users, so it is necessary to know how it performs, that is, how effectively it handles simultaneous users or applications.

For example, to find out how the servers supporting Gmail perform when many users access their Gmail accounts simultaneously, you need to run performance tests with tools such as JMeter or LoadRunner.

To check how well an application or server performs, you can run performance tests with JMeter.

Before an overview of JMeter, let us look at three testing approaches:

Performance Test: This test measures the best possible performance of the system or application under a given infrastructure configuration. Run early, it also highlights changes that need to be made before the application goes into production.

Load Test: This test determines how the system behaves under the peak load it was designed to handle.

Stress Test: This test tries to break the system by overwhelming its resources.

Introduction to JMeter

JMeter's design is based on plugins. Most of its “out of the box” features are implemented as plugins, and third-party developers can easily extend JMeter with custom plugins.

JMeter supports these protocols: Web Services (SOAP / XML-RPC); Web (HTTP and HTTPS sites, ‘web 1.0’ and web 2.0: Ajax, Flex and Flex-WS-AMF); Database (JDBC drivers); Directory (LDAP); Message-oriented services (JMS); and Services (POP3, IMAP, SMTP, FTP).

Features Supported by JMeter:

Why should you choose JMeter? To answer that question, let us look at JMeter's main features:

Open source application: JMeter is a free, open source application, so users and developers can use its source code for further development or modification.

User-friendly GUI: JMeter comes with an intuitive GUI. It is simple and easy to use, and users become familiar with it very quickly.

Platform independent: JMeter is a pure Java desktop application, so it can run on any platform that has a Java runtime. It is highly extensible and can load and performance test many different server types: Web (HTTP, HTTPS), SOAP, databases via JDBC, LDAP, JMS, and Mail (POP3).

Write your own test: Using plugins, you can write your own test cases to extend the testing process. JMeter saves test plans in XML format, so they can also be edited in a text editor.

Supports various testing approaches: JMeter supports testing approaches such as Load Testing, Distributed Testing, and Functional Testing.

Simulation: JMeter can simulate many users with concurrent threads, generating heavy load against the web application under test.

Multi-protocol support: JMeter can test both web applications and database servers, and supports protocols such as HTTP, JDBC, LDAP, SOAP, JMS, and FTP.

Scripted tests: JMeter can perform automated testing using BeanShell and Selenium.

Full multi-threading framework: JMeter's multi-threading framework allows concurrent sampling by many threads, and simultaneous sampling of different functions by separate thread groups.

Easy-to-understand test results: JMeter presents test results as charts, tables, trees, and log files that are simple to understand.

Easy installation: Just run a startup script to use JMeter. On Linux/Unix, start JMeter by running the jmeter shell script; on Windows, start it by running the jmeter.bat file.

JMeter architecture working process:

JMeter simulates a number of users sending requests to a target server, and then shows the performance and functionality of the server or application through tables, graphs, and so on. The figure below depicts this process:

Testing performance of web page with Apache JMeter

Topics covered in this article:

1. Step by step method to test single web page in Apache JMeter

2. Manually creating a test plan in Apache JMeter

3. Simulating 50 users through Apache JMeter test plan

Let us first see what we want to achieve. We want to create a Test Plan in Apache JMeter to test the performance of one web page, namely the page at the URL http://www.google.com/. We will need several elements in the JMeter Test Plan; let us see what they are:

This is what we will see when we are done creating the Test Plan. We start with a blank JMeter window.

Before adding anything else to this window, let us change the name of the Test Plan by typing a new name for the node in the Name text box. In this version of JMeter the name is not updated in the tree immediately as you type. You have to move focus to the WorkBench node and back to the Test Plan node to see the new name.

Let us give the Test Plan node the new name "First Test".

Now we will add our first element in the window: a Thread Group, which is a placeholder for all other elements such as Samplers, Controllers, and Listeners. We need one so we can configure the number of users to simulate. The Thread Group represents one set of actions performed by a user.

In JMeter all the node elements are added by using the context menu. You have to right click the element where you want to add a child element node. Then choose the appropriate option to add.

We will click the Thread Group option, and it will be added under the Test Plan (First Test) node.

Just as we renamed the Test Plan, let us also rename the Thread Group. For us, this element represents multiple users visiting the Google Home Page, so let us name it "Users".

Now we have to add one Sampler in our Thread Group (Users). As done earlier for adding Thread group, this time we will open the context menu of the Thread Group (Users) node by right clicking and we will add HTTP Request Sampler by choosing Add > Sampler > HTTP request option.

This will add one empty HTTP Request Sampler under the Thread Group (Users) node.

Most of the configuration goes into this node element, the HTTP Request Sampler. The settings we have to configure are:

Name: we will change the name to reflect the action we want to perform

We will name it "Visit Google Home Page"

Server Name or IP: here we type the web server name

In our case it is www.google.com

Note that the http:// part is not written; this field holds only the name of the server or its IP address

Protocol: we will keep this blank

Leaving it blank means we want HTTP as the protocol

Path: we will type the path as / (slash)

This means we want the root page of the server

The server then decides which page to send us, which is the default page of the website
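To see how these separate fields combine into the address that is actually requested, here is a small Python sketch using the standard `urllib.parse.urlunsplit`. The function name and the optional `port` argument are assumptions for illustration; the blank-protocol-means-HTTP rule comes from the settings above.

```python
from urllib.parse import urlunsplit

def sampler_url(server: str, path: str = "/", protocol: str = "", port: str = "") -> str:
    """Assemble the URL an HTTP Request sampler would call from its separate fields."""
    scheme = protocol or "http"                       # a blank Protocol field means HTTP
    netloc = f"{server}:{port}" if port else server   # the server field holds no "http://"
    return urlunsplit((scheme, netloc, path or "/", "", ""))

print(sampler_url("www.google.com", "/"))  # http://www.google.com/
```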

After adding the HTTP Request Sampler, we need a Listener. Let us add a View Results Tree Listener under the Thread Group (Users) node. This ensures that the results of the Sampler are available to view in this Listener node element.

Adding a listener works the same way as adding any other node under the Thread Group. Open the context menu and choose Add > Listener > View Results Tree to add the listener.

With this setup we are ready to run our first test. We have left the configuration of the Thread Group (Users) at its default values, which means JMeter will execute the sampler only once, like a single user visiting one time.

This is similar to a user visiting a web page through a browser, except that here we do it through a JMeter sampler. We will execute the test plan using the Run > Start option.

Apache JMeter asks us to save the test plan to a file before actually starting the test. This is important if we want to run the test plan again and again. If we click No, it runs without saving. Let us save it first so we can reuse it.

Because the Thread Group is set to a single thread (one user) and a loop count of 1 (run only once), we get the result of one single transaction in the View Results Tree Listener.

This means JMeter successfully fetched the web page at the given URL, just like a browser. It has stored all the headers and the response sent by the web server and is ready to show us the result in many ways.

Let us take a closer look at the three tabs available in the View Results Tree Listener view.

The first tab is Sampler Results. It shows JMeter data as well as data returned by the web server. Usually browsers hide this data from us as it is not related to showing the web page. This data is utilized internally by the browser, for example when there is a Response Code: 404 then the browser shows a page not found error page, and when the Response Code is 200 the browser shows the received web page HTML.

The second tab is Request, where all the data which was sent to the web server as part of the request is shown.

The last tab is Response Data. In this tab the listener shows the data received from the server as text. It can also render the data as XML or HTML, but that is just another view of the same data (no JavaScript is executed), so it may look different from the page actually seen in the browser.

We can select different ways of viewing this response data, as shown below:

This was just one request, but JMeter's real strength is sending the same request as if many users were sending it. To test web servers with multiple users, we have to change the Thread Group (Users) settings.

We will set 50 users and a ramp-up time of 10 seconds, so the users start with a delay of 10/50 = 0.2 seconds between them.
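The ramp-up arithmetic can be checked with a short Python sketch. It assumes that thread starts are spread evenly across the ramp-up period (thread i starts at i × ramp-up / threads), which is the usual way JMeter's ramp-up is described; the function name is hypothetical.

```python
def start_times(threads: int, ramp_up_seconds: float) -> list:
    """Approximate start time (seconds) of each thread, spread evenly over the ramp-up period."""
    interval = ramp_up_seconds / threads
    return [round(i * interval, 3) for i in range(threads)]

schedule = start_times(50, 10)
print(schedule[:4])   # [0.0, 0.2, 0.4, 0.6]
print(schedule[-1])   # 9.8
```

All 50 users are running shortly before the 10-second mark, so the test reaches full concurrency gradually instead of all at once.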

To visualize the results, a better aggregating listener will be required so we will add Summary Report Listener in the Thread Group node. Adding Summary Report Listener is similar to adding a View Results Tree Listener. Just right click on the Thread group node and choose Add > Listener > Summary Report.

We will run the test plan again after adding the Summary Report Listener, and when the test is done we will see the following results. Your results may differ from mine because of different environments, but they should be broadly similar.

This is how we tested one single page with JMeter. The Summary Report shows the measurements JMeter made while calling the same page as if many users were calling it. Let us look at some of the column headings and what they mean:

• Samples: A sample means one sampler call, in our case one request to the web page. So the value of 51 means that JMeter made a total of 51 requests to the page http://www.google.com.

• Average: The average time taken to receive the web page. In our case, JMeter added the 51 receiving times and divided the sum by 51. This value is a measure of the performance of this web page: on average, 334 milliseconds are required to receive this web page under our network conditions.

• Min and Max: The minimum and maximum time required to receive the web page.

• Std. Dev: The standard deviation shows how much the individual receiving times deviate from the average. The smaller this value, the more consistent the response times.

• Error %: The percentage of failed requests. In our case 51 calls were made and all were received successfully, so the error rate is 0. Any calls not received properly are counted as errors, and this value shows the percentage of errors relative to the total number of calls made.
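To make these column definitions concrete, here is a Python sketch that computes them from a list of (elapsed time, success) pairs. The measurements are hypothetical, not the results above, and using the population standard deviation (`statistics.pstdev`) is our assumption about how the Std. Dev column is calculated.

```python
import statistics
from typing import List, Tuple

def summarize(samples: List[Tuple[int, bool]]) -> dict:
    """Compute Summary Report style columns from (elapsed_ms, success) pairs."""
    times = [elapsed for elapsed, _ in samples]
    errors = sum(1 for _, ok in samples if not ok)
    return {
        "samples": len(samples),
        "average": statistics.mean(times),
        "min": min(times),
        "max": max(times),
        "std_dev": statistics.pstdev(times),   # assumed population standard deviation
        "error_pct": 100.0 * errors / len(samples),
    }

# Hypothetical measurements for illustration only.
report = summarize([(300, True), (320, True), (340, True), (360, False)])
print(report)
```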

To check the performance of a web page, the average receiving time is an important parameter.

Tuesday, March 8, 2016

Thursday, March 3, 2016

Validation Activities Related to the SaaS Application

Overview

The Software-as-a-Service (SaaS) model has been defined as one in which a company uses a vendor's software application from its devices through a web browser or a program interface. The client does not manage or control the underlying cloud infrastructure, including the network, servers, operating systems, storage, or even individual application capabilities, with the possible exception of application configuration settings.

SaaS and the Life Science Industry

In the life science industry, there may be a number of SaaS products throughout the organization, including customer relationship management systems, human resource information systems, laboratory information management systems, learning management systems, quality management systems, and other areas. Because validation of a SaaS application is a shared responsibility between the client and the SaaS vendor, both at the deployment phase and whenever the software is periodically upgraded, IT and validation teams must consider new validation approaches for SaaS applications.

With a SaaS application, the vendor's engineering and IT personnel are responsible for designing and maintaining the internal data center, computer hardware, software, security, disaster recovery, and so on. In this paper, we outline the typical steps performed by the company's quality assurance (QA), IT, and validation teams as well as the expectations of the SaaS vendor. The shift of certain steps to the vendor makes the vendor audit a critical activity for the client's validation, auditing, IT, and QA teams, so we will share best practices related to that audit as well.

FDA Validation Overview

According to the US Food and Drug Administration, computer system validation is the formalized documented process for testing computer software and systems as required by the Code of Federal Regulations (21 CFR 11.10(a)).

To be compliant and reduce the risk of an FDA Warning Letter, life science companies must validate all of their software, databases, spreadsheets, and computer systems and develop the appropriate documentation for all phases of the software development lifecycle (SDLC).

FDA validation guidance and the SDLC describe user site software validation in terms of installation qualification (IQ), in which documentation verifies that the system is installed according to written specifications; operational qualification (OQ), in which documentation verifies that the system operates throughout all operating ranges; and performance qualification (PQ), in which tests must span the underlying current good manufacturing practice (cGMP) business process.

In a similar way, EU Annex 11 also focuses on the product lifecycle and “user requirements” related to each system. However, Annex 11 places more emphasis on “people” and management accountability than FDA regulations do, specifically on the individuals with responsibility for the business process and system maintenance.

Validation Activities for SaaS Applications

To address these regulatory obligations, life science companies typically perform a number of activities: creating the solution design document (SDD); developing the user and functional requirements; developing test scripts; and conducting IQ, OQ, and PQ testing, among others. This effort can drain the IT and validation teams' bandwidth, adding many months to the software implementation or enhancement project. In some cases, a company may not have the qualified resources to accomplish the many validation tasks.

One of the appeals of the SaaS application is that a company can shift some of the validation effort to the SaaS vendor. This enables the company's validation team to initially focus on an audit of the vendor's data center along with their QA and validation methodology, to ensure these activities are performed to the same standard as the client's own QA and validation teams would apply. Typically, the roughly three days spent auditing the SaaS vendor can dramatically reduce the time spent validating the system.

Audit results may be incorporated into a risk assessment to leverage vendor-supplied documentation. In many cases, following an audit of the data center and SDLC methodology, a client’s audit and validation team will then develop the core validation documents:

• Validation Plan: Describes the internal activities that are part of the overall validation approach to be conducted by the client.

• User Requirements Specification: Provides details on the functionality that the clients demand from the SaaS application. These required items are often categorized as “critical,” “mandatory,” or “nice to have” and are the basis for the validation/testing effort.

• User Acceptance Test Scripts: Clients should have their own test scripts, either augmenting the vendor-owned QA test scripts or as unique scripts that demonstrate that the company has tested its specific usage of the system.

• Validation of Configurations and Customizations: Clients often test any unique software configurations or customized programming being developed by the vendor for the company.

• Traceability Matrix: Used for mapping user requirements to vendor documentation, UAT test scripts, and internal procedure documents.

• System Governance Procedures: May include system administration and change control for maintaining a validated state during the system's use and operation.

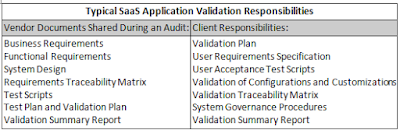

The following table summarizes the typical responsibilities as described above.

Auditing the SaaS Vendor: Best Practices

Clients often conduct an audit of the SaaS vendor at the vendor’s primary location, so the vendor can demonstrate adherence to quality software engineering and testing principles. The client’s validation teams will typically review QA documentation and project files that were developed as part of the design, development, testing, and implementation of the application.

Although qualifying a vendor by audit is not a new practice, as the SaaS model is more widely implemented, the method by which clients qualify the SaaS vendor must evolve to focus on the software lifecycle process.

The company should ensure that the vendor's quality procedures demonstrate effective planning, operation, and control of its processes and documentation. The vendor's QA team will need to demonstrate that a valid development methodology is in place and that thorough testing was performed, giving the client confidence and assurance that the application is fit for production use. Furthermore, the vendor's QA team must demonstrate that it is involved with the following activities:

• SDLC stages

• Business rules, graphical user interface design elements, and interoperability with existing features

• Test traceability and full product testing before any software enhancements are released to a production environment.

For example, below is a list of typical validation documents that SaaS vendors will be asked to present during an audit:

• Business Requirements: Focuses on the needs of the user and describes exactly what the system will do.

• Functional Requirements: Focuses on how the system will do what the user is expecting.

• System Design: Focuses on capturing the system design based on the functional requirements. It depicts screen layout, system functions, and other aspects of the user experience to fulfill the business requirements.

• Requirements Traceability Matrix: Captures the relationship between the business requirements and the system requirements, system design, and test scripts that satisfy them; this document should reflect the latest enhancements made to the platform. The SaaS vendor should ensure that documents are tracked through the use of a traceability matrix that maps the business requirements.

• Test Scripts: Used for new enhancements or custom projects. These scripts should cover basic platform functionality, audit trail functionality, reporting tools, and other high priority areas for the client. In addition, custom testing should be executed for any custom programming performed.

• Test Plan and Validation Plan: May be adopted by the vendor as standard operating procedures because the SaaS application is often multi-tenant.

• Validation Summary Report (VSR): This report summarizes vendor testing activities, discrepancies, and other validation activities.

Other key documentation that SaaS vendors may be asked to present during an audit includes:

• Product release documentation

• Project management processes

• Corrective and preventive action processes

• Service delivery processes

• Product support/maintenance (including back-up processes)

• Issue tracking processes

• Internal Audits: Vendors should periodically audit their business processes and controls

• Control: Vendors should show control of system documentation and change management

• Security: Security of buildings, hardware, documents, etc.

Key Questions to Ask Vendors Related to Electronic Signatures and Audit Trails

When a life science company evaluates SaaS applications, the company should develop a standard vendor questionnaire, which may include questions such as the ones below, grouped into the following general categories.

Governance/System Access

• Can access to the system be controlled by qualified personnel who will be responsible for the content of the electronic records that are on the system?

• Does the vendor have a 21 CFR Part 11 assessment in place?

• Does the vendor have a distinct qualification documentation process in place, addressing different levels of requirements and design documentation?

• Does the vendor have a structured change approach in place for infrastructure, applications, and interfaces, covering the change process (planning, execution, and documentation of changes), change logs, and the different roles involved?

Audit Trails

• Does the SaaS application generate an electronic audit trail for operator entries and actions that create, modify, or delete electronic records?

• Are date and time stamps applied automatically (as opposed to typed in manually by the user)?

• Are the source and format of the system date and time defined, controlled, unambiguous, and protected from unauthorized changes?

• Do the printed name, date, time, and signature meaning appear in every printable form of the electronic record?

Electronic Signatures

• Will the electronic signature be unique to one individual and not be reused or reassigned to anyone else?

• Is the signature information for signed electronic records shown both electronically and on printed copies of the records?

Documentation

• Does the validation documentation demonstrate that 21 CFR Part 11 requirements have been met and the controls are functioning correctly?

• Is the architecture (including hardware, software, and documentation) easily available to auditors?

• Does the vendor have document management processes in place to ensure documentation control and maintain document integrity?

• Does the vendor have a change control process for system documentation?

Summary

Validation of a SaaS application has the potential to improve its time-to-deployment and reduce the validation effort and overall costs for the life science organization. However, the validation team within the organization must expect the same commitment to validation activities from the SaaS vendor. To summarize, here are the key steps that can ensure a successful SaaS application validation project:

1. Audit/assessment of supplier’s capabilities, quality system, SDLC, customer support, and electronic record-keeping controls

2. Development of the user requirement specifications and validation plan

3. Configuration of supplier system to meet user requirements (configuration specification)

4. Vendor testing and release to customer

5. Personnel training

6. User acceptance testing/traceability matrix

7. Develop system administration, operation, and maintenance SOPs

8. Validation summary report and release of system for production use.

Monday, December 21, 2015

Web Server and ASP.NET Application Life Cycle in Depth


Introduction

In this article, we will try to understand what happens when a user submits a request to an ASP.NET web app. There are lots of articles that explain this topic, but none shows clearly what really happens in depth during the request. After reading this article, you will be able to understand:

• What is a Web Server

• HTTP - TCP/IP protocol

• IIS

• Web communication

• Application Manager

• Hosting environment

• Application Domain

• Application Pool

• How many app domains are created for a client request

• How many HttpApplications are created for a request and how I can affect this behaviour

• What the worker process is and how many of them run for a request

• What happens in depth between a request and a response

Start from scratch

All the articles I have read usually begin with "The user sends a request to IIS... bla bla bla". Everyone knows that IIS is a web server where we host our web applications (and much more), but what is a web server?

Let's start from the real beginning.

A Web Server (like Internet Information Server/Apache/etc.) is a piece of software that enables a website to be viewed using HTTP. We can abstract this concept by saying that a web server is a piece of software that allows resources (web pages, images, etc.) to be requested over the HTTP protocol. I am sure many of you have thought that a web server is just a special super computer, but it's just the software that runs on it that makes the difference between a normal computer and a web server.

As everyone knows, in web communication there are two main actors: the Client and the Server.

The client and the server, of course, need a connection to be able to communicate with each other, and a common set of rules to be able to understand each other. The rules they need to communicate are called protocols. Conceptually, when we speak to someone, we are using a protocol. The protocols in human communication are rules about appearance, speaking, listening, and understanding. These rules, also called protocols of conversation, represent different layers of communication. They work together to help people communicate successfully. The need for protocols also applies to computing systems. A communications protocol is a formal description of digital message formats and the rules for exchanging those messages in or between computing systems and in telecommunications.

HTTP knows all the "grammar", but it doesn't know anything about how to send a message or open a connection. That's why HTTP is built on top of TCP/IP. Below, you can see the conceptual model of HTTP on top of TCP/IP:

TCP/IP is in charge of managing the connection and all the low level operations needed to deliver the message exchanged between the client and the server.

In this article, I won't explain how TCP/IP works, because that would need a whole article of its own, but it's good to know that it is the engine that allows the client and the server to exchange messages.

HTTP is a connectionless protocol, but this doesn't mean that the client and the server don't need to establish a connection before they start to communicate with each other. It means that they don't need any prearrangement before they start to communicate.

Connectionless means the client doesn't care whether the server is ready to accept a request, and, on the other hand, the server doesn't care whether the client is ready to get the response; a connection is still needed, though.

In connection-oriented communication, the communicating peers must first establish a logical or physical data channel or connection in a dialog preceding the exchange of user data.

Now, let's see what happens when a user puts an address into the browser address bar.

• The browser breaks the URL into three parts:

o The protocol ("HTTP")

o The server name (www.Pelusoft.co.uk)

o The file name (index.html)

• The browser communicates with a name server to translate the server name "www.Pelusoft.co.uk" into an IP address, which it uses to connect to the server machine.

• The browser then forms a connection to the server at that IP address on port 80. (We'll discuss ports later in this article.)

• Following the HTTP protocol, the browser sends a GET request to the server, asking for the file "http://www.pelusoft.co.uk/index.html". (Note that cookies may be sent from the browser to the server with the GET request; see How Internet Cookies Work for details.)

• The server then sends the HTML text for the web page to the browser. (Cookies may also be sent from the server to the browser in the header for the page.)

• The browser reads the HTML tags and formats the page onto your screen.
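To make these steps concrete, here is a minimal Python sketch of the exchange using only the standard socket module. It assumes a local stand-in server on 127.0.0.1 in place of www.Pelusoft.co.uk (so the DNS lookup step is skipped) and a hypothetical one-line HTML page; the point is only that an HTTP request and response are plain text carried over a TCP connection.

```python
import socket
import threading

# Hypothetical page returned by the stand-in server.
PAGE = b"<html><body>Hello from index.html</body></html>"


def serve_once(listener: socket.socket) -> None:
    """Stand-in web server: accept one TCP connection, read the request
    headers, send back a fixed HTML page, then close the connection."""
    conn, _ = listener.accept()
    with conn:
        request = b""
        # The request headers end with an empty line (CRLF CRLF).
        while b"\r\n\r\n" not in request:
            chunk = conn.recv(1024)
            if not chunk:
                break
            request += chunk
        header = (
            "HTTP/1.1 200 OK\r\n"
            "Content-Type: text/html\r\n"
            f"Content-Length: {len(PAGE)}\r\n"
            "Connection: close\r\n"
            "\r\n"
        )
        conn.sendall(header.encode("ascii") + PAGE)


def browser_get(ip: str, port: int, host: str, path: str) -> str:
    """Browser side: open a TCP connection, write the GET request as
    plain text, and read until the server closes the connection."""
    with socket.create_connection((ip, port)) as sock:
        request = (
            f"GET {path} HTTP/1.1\r\n"
            f"Host: {host}\r\n"
            "Connection: close\r\n"
            "\r\n"
        )
        sock.sendall(request.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # empty read: the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks).decode("ascii")


def demo() -> str:
    # Port 0 lets the OS pick a free port; 127.0.0.1 replaces the DNS step.
    listener = socket.create_server(("127.0.0.1", 0))
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_once, args=(listener,))
    server.start()
    try:
        return browser_get("127.0.0.1", port, "www.pelusoft.co.uk", "/index.html")
    finally:
        server.join()
        listener.close()


if __name__ == "__main__":
    print(demo().splitlines()[0])
```

The `Host` header is what tells the server which site name the browser asked for, since the TCP connection itself only knows an IP address and a port.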

In the original HTTP/1.0 model, the connection is established by the client before each request and closed by the server after sending the response; HTTP/1.1 instead keeps connections open by default so that several requests can reuse them. Both clients and servers should be aware that either party may close the connection prematurely, due to user action, automated time-out, or program failure, and should handle such closing in a predictable fashion. In any case, closing the connection by either or both parties always terminates the current request, regardless of its status.

At this point, you should have an idea of how HTTP and TCP/IP work together. Of course, there is a lot more to say, but this article only takes a very high-level view of these protocols, to better understand all the steps that occur from the moment the user starts browsing a web site.

Now it's time to move on and focus on what happens when the web server receives the request, and how it gets the request in the first place.

As I showed earlier, a web server is a "normal computer" running special software that makes it a web server. Let's suppose that IIS runs on our web server. From a very high-level view, IIS is just a process listening on a particular port (usually 80). Listening means it is ready to accept connections from clients on port 80. A very important thing to remember is: IIS is not ASP.NET. This means that IIS doesn't know anything about ASP.NET; it can work by itself. We can have a web server that hosts just HTML pages, images, or any other kind of web resource. The web server, as I explained earlier, just has to return the resource the browser is asking for.
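As a rough illustration that a web server on its own just maps a requested path to a resource, here is a minimal Python sketch built on the standard library's http.server module (not IIS). The RESOURCES table and its paths are hypothetical. Because nothing in this server knows how to run server-side code, a request for an .aspx page simply gets a 404.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple

# Hypothetical static site: URL path -> (content type, file contents).
RESOURCES = {
    "/index.html": ("text/html", b"<h1>Home</h1>"),
    "/images/logo.txt": ("text/plain", b"logo placeholder"),
}


class StaticHandler(BaseHTTPRequestHandler):
    """Returns the resource the browser asks for, and nothing more."""

    def do_GET(self) -> None:
        resource = RESOURCES.get(self.path)
        if resource is None:
            # No server-side engine here: an .aspx page is just unknown.
            self.send_error(404, "Not Found")
            return
        content_type, body = resource
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the demo output quiet


def get(port: int, path: str) -> Tuple[int, bytes]:
    conn = http.client.HTTPConnection("127.0.0.1", port)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()


def demo() -> Tuple[Tuple[int, bytes], int]:
    # Port 0 lets the OS pick a free port; a real server would use port 80.
    server = HTTPServer(("127.0.0.1", 0), StaticHandler)
    port = server.server_address[1]
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        page = get(port, "/index.html")
        missing_status, _ = get(port, "/default.aspx")
        return page, missing_status
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```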

ASP.NET and IIS

The web server can also support server-side scripting (such as ASP.NET). This paragraph shows what happens on a server running ASP.NET and how IIS can "talk" with the ASP.NET engine. When we install ASP.NET on a server, the installation updates the script map of an application so that the relevant file extensions are handled by the corresponding ISAPI extension. For example, the "aspx" extension is mapped to aspnet_isapi.dll, so IIS hands requests for an .aspx page to aspnet_isapi.dll (the ASP.NET registration can also be done using aspnet_regiis.exe). The script map is shown below:

An ISAPI filter is a plug-in that can access the HTTP data stream before IIS processes it, whereas an ISAPI extension such as aspnet_isapi.dll receives the requests that the script map routes to it. Without that ISAPI extension, IIS cannot hand a request for an .aspx page to the ASP.NET engine. From a very high point of view, we can think of the script map and its ISAPI extensions as a router for IIS requests: every time a requested resource has a file extension listed in the map table (the one shown earlier), IIS sends the request to the right place. In the case of an .aspx page, the request goes to the .NET runtime, which knows how to process it. Now, let's see how it works.
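The routing idea can be sketched in a few lines of Python. This is a conceptual model only, not how IIS is configured or implemented: the SCRIPT_MAP dictionary is a simplified, hypothetical stand-in for the script map, and the route function just decides, from the file extension, whether IIS serves the file itself or hands the request to an ISAPI extension.

```python
from pathlib import PurePosixPath

# Simplified, hypothetical script map: file extension -> ISAPI extension.
# Only meant to mirror the ".aspx -> aspnet_isapi.dll" example above.
SCRIPT_MAP = {
    ".aspx": "aspnet_isapi.dll",
    ".asmx": "aspnet_isapi.dll",
    ".ashx": "aspnet_isapi.dll",
}


def route(url_path: str) -> str:
    """Decide who handles a request, based only on the file extension."""
    path = url_path.split("?", 1)[0]  # ignore the query string
    extension = PurePosixPath(path).suffix.lower()
    handler = SCRIPT_MAP.get(extension)
    if handler is None:
        # Not in the map: IIS serves the resource by itself.
        return "IIS (static file)"
    return handler


if __name__ == "__main__":
    for url in ["/default.aspx?id=7", "/images/logo.png", "/index.html"]:
        print(url, "->", route(url))
```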

When a request comes in:

• IIS starts the worker process (w3wp.exe) if it is not already running.

• The aspnet_isapi.dll is hosted in the w3wp.exe process. IIS checks the script map and routes the request to aspnet_isapi.dll.

• The request is passed to the .NET runtime, which is also hosted in w3wp.exe.

Finally, the request gets into the runtime

This paragraph focuses on how the runtime handles the request and shows all the objects involved in the process.

First of all, let's have a look at what happens when the request gets to the runtime.

• When ASP.NET receives the first request for any resource in an application, a class named ApplicationManager creates an application domain. (Application domains provide isolation between applications for global variables, and allow each application to be unloaded separately.)

• Within an application domain, an instance of the class named HostingEnvironment is created, which provides access to information about the application such as the name of the folder where the application is stored.

• After the application domain has been created and the Hosting Environment object instantiated, ASP.NET creates and initializes core objects such as HttpContext, HttpRequest, and HttpResponse.

• After all core application objects have been initialized, the application is started by creating an instance of the HttpApplication class.

• If the application has a Global.asax file, ASP.NET instead creates an instance of the Global.asax class that is derived from the HttpApplication class and uses the derived class to represent the application.
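The first-request steps above can be modelled, very loosely, as in the following Python sketch. All class names are hypothetical stand-ins named after the objects in the text (they are not the System.Web types), and instance pooling is left out. It shows two points: the AppDomain and Hosting Environment are created only on the first request for an application, and the Global.asax-derived class replaces HttpApplication when that file exists.

```python
from dataclasses import dataclass
from typing import Dict


# Hypothetical stand-ins named after the objects in the text; they are
# not the real System.Web types and skip almost everything those do.
@dataclass
class HostingEnvironment:
    app_path: str  # e.g. the folder where the application is stored


class HttpApplication:
    def process(self, path: str) -> str:
        return f"{type(self).__name__} processed {path}"


class GlobalAsaxApplication(HttpApplication):
    """Stands in for the class ASP.NET builds from Global.asax."""


@dataclass
class AppDomain:
    hosting_environment: HostingEnvironment
    app_class: type


class ApplicationManager:
    def __init__(self) -> None:
        self.domains: Dict[str, AppDomain] = {}

    def handle(self, app_path: str, path: str, has_global_asax: bool) -> str:
        domain = self.domains.get(app_path)
        if domain is None:
            # First request for this application: create its AppDomain,
            # with a HostingEnvironment inside it.
            app_class = GlobalAsaxApplication if has_global_asax else HttpApplication
            domain = AppDomain(HostingEnvironment(app_path), app_class)
            self.domains[app_path] = domain
        # Core objects (HttpContext, HttpRequest, HttpResponse) would be
        # created here; then an application instance processes the request.
        app = domain.app_class()
        return app.process(path)


if __name__ == "__main__":
    manager = ApplicationManager()
    print(manager.handle("/WebSite1", "/default.aspx", has_global_asax=True))
    print(manager.handle("/WebSite1", "/about.aspx", has_global_asax=True))
    print(len(manager.domains))  # still one AppDomain for WebSite1
```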

Those are the first steps that happen when a client request arrives. Most articles don't say anything about these steps. In this article, we will analyze in depth what happens at each step.

Below, you can see all the steps the request has to pass through before it is processed.

Application Manager

The first object we have to talk about is the Application Manager.

Application Manager is actually an object that sits on top of all running ASP.NET AppDomains, and can do things like shut them all down or check for idle status.

For example, when you change the configuration file of your web application, the Application Manager is in charge of restarting the AppDomain, so that all the running application instances (your web site instance) are created again and load the new configuration file.

Requests that are already in the pipeline processing will continue to run through the existing pipeline, while any new request coming in gets routed to the new AppDomain. To avoid the problem of "hung requests", ASP.NET forcefully shuts down the AppDomain after the request timeout period is up, even if requests are still pending.
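Here is a hypothetical Python model of that recycling behaviour: a configuration change creates a new AppDomain for new requests, the old AppDomain keeps serving the requests already in flight and is shut down once they finish or, forcibly, when the request timeout elapses. The class and method names are illustrative assumptions, not ASP.NET APIs.

```python
import itertools
from typing import List


class Domain:
    def __init__(self, generation: int) -> None:
        self.generation = generation
        self.in_flight = 0
        self.shut_down = False


class DomainRecycler:
    """Hypothetical model of the behaviour described above, not ASP.NET code."""

    def __init__(self) -> None:
        self._generations = itertools.count(1)
        self.current = Domain(next(self._generations))
        self.retiring: List[Domain] = []

    def begin_request(self) -> Domain:
        # New requests always go to the current AppDomain.
        self.current.in_flight += 1
        return self.current

    def end_request(self, domain: Domain) -> None:
        domain.in_flight -= 1
        self._shut_down_drained()

    def config_changed(self) -> None:
        # e.g. web.config edited: start a new AppDomain, retire the old one.
        self.retiring.append(self.current)
        self.current = Domain(next(self._generations))
        self._shut_down_drained()

    def request_timeout_elapsed(self) -> None:
        # Avoid "hung requests": force the old AppDomains down anyway.
        for domain in self.retiring:
            domain.shut_down = True
        self.retiring.clear()

    def _shut_down_drained(self) -> None:
        for domain in list(self.retiring):
            if domain.in_flight == 0:
                domain.shut_down = True
                self.retiring.remove(domain)


if __name__ == "__main__":
    recycler = DomainRecycler()
    old = recycler.begin_request()   # request in flight on generation 1
    recycler.config_changed()        # generation 2 takes new requests
    new = recycler.begin_request()
    recycler.end_request(old)        # generation 1 drains and shuts down
    print(old.generation, old.shut_down, new.generation, new.shut_down)
```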

Application Manager is the "manager", but the Hosting Environment contains all the "logic" to manage the application instances. It's like when you have a class that uses an interface: within the class methods, you just call the interface method. In this case, the methods are called within the Application Manager, but are executed in the Hosting Environment (let's suppose the Hosting Environment is the class that implements the interface).

At this point, you should have a question: how can the Application Manager communicate with the Hosting Environment, given that the Hosting Environment lives inside an AppDomain? (We said the AppDomain creates a kind of boundary around the application to isolate it.) In fact, the Hosting Environment inherits from the MarshalByRefObject class so that it can use Remoting to communicate with the Application Manager. The Application Manager creates a remote object (the Hosting Environment) and calls methods on it.

So we can say the Hosting Environment is the "remote interface" that is used by the Application Manager, but the code is "executed" within the Hosting Environment object.
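The interface analogy can be sketched in Python as a proxy that forwards calls. This only imitates the call flow: in .NET the proxy is produced by Remoting because HostingEnvironment derives from MarshalByRefObject, whereas here IHostingEnvironment, CrossDomainProxy, and shutdown_app are hypothetical names and there is no real isolation boundary.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class IHostingEnvironment(ABC):
    """The 'interface' from the analogy above (hypothetical name)."""

    @abstractmethod
    def shutdown_app(self, reason: str) -> str:
        ...


class HostingEnvironment(IHostingEnvironment):
    """Lives 'inside' the AppDomain and contains the actual logic."""

    def __init__(self, app_name: str) -> None:
        self.app_name = app_name

    def shutdown_app(self, reason: str) -> str:
        return f"{self.app_name} shut down: {reason}"


class CrossDomainProxy(IHostingEnvironment):
    """Plays the role of the Remoting proxy: the caller only holds this
    object, and every call is forwarded to the real HostingEnvironment."""

    def __init__(self, target: IHostingEnvironment) -> None:
        self._target = target
        self.calls_forwarded = 0

    def shutdown_app(self, reason: str) -> str:
        self.calls_forwarded += 1
        return self._target.shutdown_app(reason)


class ApplicationManager:
    """Sits on top of all applications and only talks to proxies."""

    def __init__(self) -> None:
        self.environments: Dict[str, IHostingEnvironment] = {}

    def create_environment(self, app_name: str) -> CrossDomainProxy:
        proxy = CrossDomainProxy(HostingEnvironment(app_name))
        self.environments[app_name] = proxy
        return proxy

    def shutdown_all(self, reason: str) -> List[str]:
        return [env.shutdown_app(reason) for env in self.environments.values()]


if __name__ == "__main__":
    manager = ApplicationManager()
    manager.create_environment("WebSite1")
    manager.create_environment("WebSite2")
    print(manager.shutdown_all("web.config changed"))
```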

HttpApplication

In the previous paragraphs, I used the term "Application" a lot. HttpApplication is an instance of your web application. It's the object in charge of processing the request and returning the response that has to be sent back to the client. An HttpApplication can process only one request at a time. However, to maximize performance, HttpApplication instances might be reused for multiple requests, but each instance always executes one request at a time.

This simplifies application event handling because you do not need to lock non-static members in the application class when you access them. This also allows you to store request-specific data in non-static members of the application class. For example, you can define a property in the Global.asax file and assign it a request-specific value.
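A hypothetical Python sketch of the "one request at a time, but reused" rule: idle instances are kept in a pool, a busy instance is never handed to a second request, and so the request-specific field current_user needs no lock. HttpApplicationPool and max_instances are illustrative assumptions; this is not how ASP.NET implements its own pool.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class HttpApplication:
    """Hypothetical stand-in. current_user is a non-static, request-specific
    member; no lock is needed because one instance serves one request at a time."""

    def __init__(self) -> None:
        self.current_user = None

    def process(self, user: str) -> str:
        self.current_user = user
        return f"hello {self.current_user}"


class HttpApplicationPool:
    """Hypothetical pool: reuses idle instances, never shares a busy one."""

    def __init__(self, factory: Callable[[], HttpApplication], max_instances: int) -> None:
        self._factory = factory
        self._idle: "queue.LifoQueue[HttpApplication]" = queue.LifoQueue()
        self._slots = threading.Semaphore(max_instances)
        self._lock = threading.Lock()
        self.created = 0

    def execute(self, user: str) -> str:
        with self._slots:  # at most max_instances requests run at once
            try:
                app = self._idle.get_nowait()  # reuse an idle instance
            except queue.Empty:
                with self._lock:
                    self.created += 1
                app = self._factory()
            try:
                return app.process(user)
            finally:
                self._idle.put(app)  # back in the pool for the next request


if __name__ == "__main__":
    pool = HttpApplicationPool(HttpApplication, max_instances=2)
    users = [f"user{i}" for i in range(20)]
    with ThreadPoolExecutor(max_workers=8) as executor:
        replies = list(executor.map(pool.execute, users))
    print(replies[:2], "instances created:", pool.created)
```

Because an instance is removed from the idle queue while it works, the number of instances never exceeds max_instances, and sequential requests keep reusing the same one.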

You can't manually create an instance of HttpApplication; the Application Manager is in charge of that. You can only configure the maximum number of HttpApplications you want the Application Manager to create. Several attributes of the processModel element in machine.config affect the Application Manager's behaviour:

processModel enable="true|false"

timeout="hrs:mins:secs|Infinite"

idleTimeout="hrs:mins:secs|Infinite"

shutdownTimeout="hrs:mins:secs|Infinite"

requestLimit="num|Infinite"

requestQueueLimit="num|Infinite"

restartQueueLimit="num|Infinite"

memoryLimit="percent"

webGarden="true|false"

cpuMask="num"

userName=""

password=""

logLevel="All|None|Errors"

clientConnectedCheck="hrs:mins:secs|Infinite"

comAuthenticationLevel="Default|None|Connect|Call|Pkt|PktIntegrity|PktPrivacy"

comImpersonationLevel="Default|Anonymous|Identify|Impersonate|Delegate"

responseDeadlockInterval="hrs:mins:secs|Infinite"

responseRestartDeadlockInterval="hrs:mins:secs|Infinite"

autoConfig="true|false"

maxWorkerThreads="num"

maxIoThreads="num"

minWorkerThreads="num"

minIoThreads="num"

serverErrorMessageFile=""

pingFrequency="Infinite"

pingTimeout="Infinite"

maxAppDomains="2000"

With minWorkerThreads and maxWorkerThreads, you set up the minimum and maximum number of HttpApplications.

For more information, have a look at: ProcessModel Element.

To summarize what we have said so far, for a request to a web application we have:

• A Worker Process w3wp.exe is started (if it is not running).

• An instance of ApplicationManager is created.

• An ApplicationPool is created.

• An instance of a Hosting Environment is created.

• A pool of HttpApplication instances is created (configured in machine.config).

So far, we have talked about just one web application under IIS, let's say WebSite1. What happens if we create another application under IIS for WebSite2?

• We will have the same process explained above.

• WebSite2 will be executed within the existing Worker Process w3wp.exe (where WebSite1 is running).

• The same Application Manager instance will manage WebSite2 as well. There is always one instance per worker process (w3wp.exe).

• WebSite2 will have its own AppDomain and Hosting Environment.

It's very important to note that each web application runs in a separate AppDomain, so that if one fails or does something wrong, it won't affect the other web apps, which can carry on their work. At this point, we should have other questions:

What would happen if a web application, let's say WebSite1, did something wrong that affected the worker process (even if that is quite hard to do)?

What if I want to recycle the application domain?

To summarize, an AppPool consists of one or more worker processes. Each web application that you are running usually has its own Application Domain. The issue is that when you assign multiple web applications to the same AppPool, even though they are separated by the Application Domain boundary, they still run in the same process (w3wp.exe). This can be less reliable and secure than using a separate AppPool for each web application. On the other hand, it can improve performance by reducing the overhead of multiple processes.

An Internet Information Services (IIS) application pool is a grouping of URLs that is routed to one or more worker processes. Because application pools define a set of web applications that share one or more worker processes, they provide a convenient way to administer a set of web sites and applications and their corresponding worker processes. Process boundaries separate each worker process; therefore, a web site or application in an application pool will not be affected by application problems in other application pools. Application pools significantly increase both the reliability and manageability of a web infrastructure.
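The difference between AppDomain isolation and process isolation can be illustrated with a small hypothetical Python model: sites assigned to the same pool share one worker process, so a failure that brings down that process affects all of them, while a site in another pool keeps running. The classes are assumptions for illustration, and the model gives each pool exactly one worker process.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class WorkerProcess:
    """Stands in for one w3wp.exe; each site in it gets its own AppDomain."""
    pid: int
    app_domains: List[str] = field(default_factory=list)
    alive: bool = True


@dataclass
class AppPool:
    name: str
    worker: WorkerProcess


class Iis:
    """Hypothetical model: sites are assigned to pools, and every pool
    has its own single worker process."""

    def __init__(self) -> None:
        self.pools: Dict[str, AppPool] = {}
        self.site_to_pool: Dict[str, str] = {}
        self._next_pid = 1000

    def add_site(self, site: str, pool_name: str) -> None:
        if pool_name not in self.pools:
            self.pools[pool_name] = AppPool(pool_name, WorkerProcess(self._next_pid))
            self._next_pid += 1
        # Same process, separate AppDomain for each site in the pool.
        self.pools[pool_name].worker.app_domains.append(site)
        self.site_to_pool[site] = pool_name

    def crash(self, site: str) -> None:
        # A failure that takes down the whole process, not just one AppDomain.
        self.pools[self.site_to_pool[site]].worker.alive = False

    def is_up(self, site: str) -> bool:
        return self.pools[self.site_to_pool[site]].worker.alive


if __name__ == "__main__":
    iis = Iis()
    iis.add_site("WebSite1", "SharedPool")
    iis.add_site("WebSite2", "SharedPool")
    iis.add_site("WebSite3", "IsolatedPool")
    iis.crash("WebSite1")
    for site in ["WebSite1", "WebSite2", "WebSite3"]:
        print(site, "up" if iis.is_up(site) else "down")
```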

Introduction

In this article, we will try to understand what happens when a user submits a request to an ASP.NET web app. There are lots of articles that explain this topic, but none that shows in a clear way what really happens in depth during the request. After reading this article, you will be able to understand:

• What is a Web Server

• HTTP - TCP/IP protocol

• IIS

• Web communication

• Application Manager

• Hosting environment

• Application Domain

• Application Pool

• How many app domains are created against a client request

• How many HttpApplications are created against a request and how I can affect this behaviour

• What is the work processor and how many of it are running against a request

• What happens in depth between a request and a response

Start from scratch

All the articles I have read usually begins with "The user sends a request to IIS... bla bla bla". Everyone knows that IIS is a web server where we host our web applications (and much more), but what is a web server?

Let start from the real beginning.

A Web Server (like Internet Information Server/Apache/etc.) is a piece of software that enables a website to be viewed using HTTP. We can abstract this concept by saying that a web server is a piece of software that allows resources (web pages, images, etc.) to be requested over the HTTP protocol. I am sure many of you have thought that a web server is just a special super computer, but it's just the software that runs on it that makes the difference between a normal computer and a web server.

As everyone knows, in a Web Communication, there are two main actors: the Client and the Server.

The client and the server, of course, need a connection to be able to communicate with each other, and a common set of rules to be able to understand each other. The rules they need to communicate are called protocols. Conceptually, when we speak to someone, we are using a protocol. The protocols in human communication are rules about appearance, speaking, listening, and understanding. These rules, also called protocols of conversation, represent different layers of communication. They work together to help people communicate successfully. The need for protocols also applies to computing systems. A communications protocol is a formal description of digital message formats and the rules for exchanging those messages in or between computing systems and in telecommunications.

HTTP knows all the "grammar", but it doesn't know anything about how to send a message or open a connection. That's why HTTP is built on top of TCP/IP. Below, you can see the conceptual model of this protocol on top of the HTTP protocol:

TCP/IP is in charge of managing the connection and all the low level operations needed to deliver the message exchanged between the client and the server.

In this article, I won't explain how TCP/IP works, because I should write a whole article on it, but it's good to know it is the engine that allows the client and the server to have message exchanges.

HTTP is a connectionless protocol, but it doesn't mean that the client and the server don't need to establish a connection before they start to communicate with each other. But, it means that the client and the server don't need to have any prearrangement before they start to communicate.

Connectionless means the client doesn't care if the server is ready to accept a request, and on the other hand, the server doesn't care if the client is ready to get the response, but a connection is still needed.

In connection-oriented communication, the communicating peers must first establish a logical or physical data channel or connection in a dialog preceding the exchange of user data.

Now, let's see what happens when a user puts an address into the browser address bar.

• The browser breaks the URL into three parts:

o The protocol ("HTTP")

o The server name (www.Pelusoft.co.uk)

o The file name (index.html)

• The browser communicates with a name server to translate the server name "www.Pelusoft.co.uk" into an IP address, which it uses to connect to the server machine.

• The browser then forms a connection to the server at that IP address on port 80. (We'll discuss ports later in this article.)

• Following the HTTP protocol, the browser sents a GET request to the server, asking for the file "http://www.pelusoft.co.uk.com/index.htm". (Note that cookies may be sent from the browser to the server with the GET request -- see How Internet Cookies Work for details.)

• The server then sents the HTML text for the web page to the browser. Cookies may also be sent from the server to the browser in the header for the page.)

• The browser reads the HTML tags and formats the page onto your screen.

The current practice requires that the connection be established by the client prior to each request, and closed by the server after sending the response. Both clients and servers should be aware that either party may close the connection prematurely, due to user action, automated time-out, or program failure, and should handle such closing in a predictable fashion. In any case, the closing of the connection by either or both parties always terminates the current request, regardless of its status.

At this point, you should have an idea about how the HTTP - TCP/IP protocol works. Of course, there is a lot more to say, but the scope of this article is just a very high view of these protocols just to better understand all the steps that occur since the user starts to browse a web site.

Now it's time to go ahead, moving the focus on to what happens when the web server receives the request and how it can get the request itself.

As I showed earlier, a web server is a "normal computer" that is running a special software that makes it a Web Server. Let's suppose that IIS runs on our web server. From a very high view, IIS is just a process which is listening on a particular port (usually 80). Listening means it is ready to accept a connections from clients on port 80. A very important thing to remember is: IIS is not ASP.NET. This means that IIS doesn't know anything about ASP.NET; it can work by itself. We can have a web server that is hosting just HTML pages or images or any other kind of web resource. The web server, as I explained earlier, has to just return the resource the browser is asking for.

ASP.NET and IIS

The web server can also support server scripting (as ASP.NET). What I show in this paragraph is what happens on the server running ASP.NET and how IIS can "talk" with the ASP.NET engine. When we install ASP.NET on a server, the installation updates the script map of an application to the corresponding ISAPI extensions to process the request given to IIS. For example, the "aspx" extension will be mapped to aspnet_isapi.dll and hence requests for an aspx page to IIS will be given to aspnet_isapi (the ASP.NET registration can also be done using Aspnet_regiis). The script map is shown below:

The ISAPI filter is a plug-in that can access an HTTP data stream before IIS gets to see it. Without the ISAPI filter, IIS cannot redirect a request to the ASP.NET engine (in the case of a .aspx page). From a very high point of view, we can think of the ISAPI filter as a router for IIS requests: every time there is a resource requested whose file extension is present on the map table (the one shown earlier), it redirect the request to the right place. In the case of an .aspx page, it redirects the request to the .NET runtime that knows how to elaborate the request. Now, let's see how it works.

When a request comes in:

• IIS creates/runs the work processor (w3wp.exe) if it is not running.

• The aspnet_isapi.dll is hosted in the w3wp.exe process. IIS checks for the script map and routes the request to aspnet_isapi.dll.

• The request is passed to the .NET runtime that is hosted into w3wp.exe as well.

Finally, the request gets into the runtime

This paragraph focuses on how the runtime handles the request and shows all the objects involved in the process.

First of all, let's have a look at what happens when the request gets to the runtime.

• When ASP.NET receives the first request for any resource in an application, a class namedApplicationManager creates an application domain. (Application domains provide isolation between applications for global variables, and allow each application to be unloaded separately.)

• Within an application domain, an instance of the class named Hosting Environment is created, which provides access to information about the application such as the name of the folder where the application is stored.

• After the application domain has been created and the Hosting Environment object instantiated, ASP.NET creates and initializes core objects such as HttpContext, HttpRequest, and HttpResponse.

• After all core application objects have been initialized, the application is started by creating an instance of the HttpApplication class.

• If the application has a Global.asax file, ASP.NET instead creates an instance of the Global.asax class that is derived from the HttpApplication class and uses the derived class to represent the application.

Those are the first steps that happens against a client request. Most articles don't say anything about these steps. In this article, we will analyze in depth what happens at each step.

Below, you can see all the steps the request has to pass though before it is elaborated.

Application Manager

The first object we have to talk about is the Application Manager.

Application Manager is actually an object that sits on top of all running ASP.NET AppDomains, and can do things like shut them all down or check for idle status.

For example, when you change the configuration file of your web application, the Application Manager is in charge to restart the AppDomain to allow all the running application instances (your web site instance) to be created again for loading the new configuration file you may have changed.

Requests that are already in the pipeline processing will continue to run through the existing pipeline, while any new request coming in gets routed to the new AppDomain. To avoid the problem of "hung requests", ASP.NET forcefully shuts down the AppDomain after the request timeout period is up, even if requests are still pending.

Application Manager is the "manager", but the Hosting Environment contains all the "logic" to manage the application instances. It's like when you have a class that uses an interface: within the class methods, you just call the interface method. In this case, the methods are called within the Application Manager, but are executed in the Hosting Environment (let's suppose the Hosting Environment is the class that implements the interface).

At this point, you should have a question: how can the Application Manager communicate with the Hosting Environment, given that the Hosting Environment lives in an AppDomain? (We said the AppDomain creates a kind of boundary around the application to isolate it.) In fact, the Hosting Environment has to inherit from the MarshalByRefObject class so that it can use Remoting to communicate with the Application Manager. The Application Manager creates a remote object (the Hosting Environment) and calls methods on it.

So we can say the Hosting Environment is the "remote interface" that is used by the Application Manager, but the code is "executed" within the Hosting Environment object.
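The interface analogy can be made concrete with a small proxy: the manager holds only an interface-typed reference, and every call is forwarded to the object that does the real work. This is a hypothetical Python sketch; `RemoteProxy` merely imitates the idea of a proxy to a MarshalByRefObject and is not a Remoting implementation, and all method names are invented.

```python
# Hypothetical sketch of "the manager calls, the hosting environment executes".
# RemoteProxy only imitates a proxy to a MarshalByRefObject; it is not Remoting.
from abc import ABC, abstractmethod


class IHostingEnvironment(ABC):
    @abstractmethod
    def shutdown(self) -> str: ...

    @abstractmethod
    def is_idle(self) -> bool: ...


class HostingEnvironment(IHostingEnvironment):
    """Lives 'inside' the AppDomain and holds the real logic."""

    def __init__(self, app_name: str):
        self.app_name = app_name
        self.active_requests = 0

    def shutdown(self) -> str:
        return f"{self.app_name} shut down"

    def is_idle(self) -> bool:
        return self.active_requests == 0


class RemoteProxy(IHostingEnvironment):
    """Stands in for the proxy the caller holds across the AppDomain boundary."""

    def __init__(self, target: IHostingEnvironment):
        self._target = target
        self.calls = []           # calls that "crossed the boundary"

    def shutdown(self) -> str:
        self.calls.append("shutdown")
        return self._target.shutdown()

    def is_idle(self) -> bool:
        self.calls.append("is_idle")
        return self._target.is_idle()


class ApplicationManager:
    def __init__(self):
        self.hosts = {}

    def create_host(self, app_name: str) -> IHostingEnvironment:
        proxy = RemoteProxy(HostingEnvironment(app_name))
        self.hosts[app_name] = proxy
        return proxy

    def shutdown_idle(self) -> list:
        # The manager only calls interface methods; the work runs in HostingEnvironment.
        return [h.shutdown() for h in self.hosts.values() if h.is_idle()]
```

The manager never touches `HostingEnvironment` directly, which mirrors the point above: the calls are made by the Application Manager, but the code executes in the Hosting Environment.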

HttpApplication

In the previous paragraph, I used the term "Application" a lot. HttpApplication is an instance of your web application. It's the object in charge of processing the request and returning the response that has to be sent back to the client. An HttpApplication can process only one request at a time. However, to maximize performance, HttpApplication instances might be reused for multiple requests, but each one still executes only one request at a time.

This simplifies application event handling because you do not need to lock non-static members in the application class when you access them. It also allows you to store request-specific data in non-static members of the application class. For example, you can define a property in the Global.asax file and assign it a request-specific value.
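A pool of reusable instances, each handed to only one request at a time, is what makes lock-free, request-specific instance members safe. The following is a hypothetical Python sketch of that idea using a blocking queue; `FakeHttpApplication`, `ApplicationPool` and `run_concurrently` are illustrative names, not ASP.NET types.

```python
# Hypothetical sketch of an HttpApplication pool: each instance serves one
# request at a time and is reused afterwards. Names are illustrative only.
import queue
import threading


class FakeHttpApplication:
    def __init__(self, instance_id: int):
        self.instance_id = instance_id
        self.current_user = None   # request-specific, non-static member: no lock needed
        self._busy = False

    def process(self, user: str) -> str:
        assert not self._busy, "an instance must never run two requests at once"
        self._busy = True
        self.current_user = user
        result = f"instance {self.instance_id} served {self.current_user}"
        self._busy = False
        return result


class ApplicationPool:
    def __init__(self, size: int):
        self._free = queue.Queue()
        for i in range(size):
            self._free.put(FakeHttpApplication(i))

    def handle(self, user: str) -> str:
        app = self._free.get()      # blocks until an instance is free
        try:
            return app.process(user)
        finally:
            self._free.put(app)     # return the instance for reuse


def run_concurrently(pool: ApplicationPool, users: list) -> list:
    results = []
    lock = threading.Lock()         # protects the shared results list only

    def worker(user: str) -> None:
        outcome = pool.handle(user)
        with lock:
            results.append(outcome)

    threads = [threading.Thread(target=worker, args=(u,)) for u in users]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With a pool of two instances and ten concurrent requests, every request is served, and only instances 0 and 1 ever appear in the results because they are reused.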

You can't manually create an instance of HttpApplication; the Application Manager is in charge of that. You can only configure settings that affect how many HttpApplication instances the Application Manager creates. There are a number of keys in the processModel section of machine.config that affect the Application Manager's behaviour:

<processModel
   enable="true|false"
   timeout="hrs:mins:secs|Infinite"
   idleTimeout="hrs:mins:secs|Infinite"
   shutdownTimeout="hrs:mins:secs|Infinite"
   requestLimit="num|Infinite"
   requestQueueLimit="num|Infinite"
   restartQueueLimit="num|Infinite"
   memoryLimit="percent"
   webGarden="true|false"
   cpuMask="num"
   userName="<username>"
   password="<secure password>"
   logLevel="All|None|Errors"
   clientConnectedCheck="hrs:mins:secs|Infinite"
   comAuthenticationLevel="Default|None|Connect|Call|Pkt|PktIntegrity|PktPrivacy"
   comImpersonationLevel="Default|Anonymous|Identify|Impersonate|Delegate"
   responseDeadlockInterval="hrs:mins:secs|Infinite"
   responseRestartDeadlockInterval="hrs:mins:secs|Infinite"
   autoConfig="true|false"
   maxWorkerThreads="num"
   maxIoThreads="num"
   minWorkerThreads="num"
   minIoThreads="num"
   serverErrorMessageFile=""
   pingFrequency="Infinite"
   pingTimeout="Infinite"
   maxAppDomains="2000"
/>

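maxWorkerThreads and minWorkerThreads set the maximum and minimum number of worker threads (per CPU) available to the process. Because each HttpApplication instance processes only one request at a time, these limits indirectly bound how many HttpApplication instances can be in use at once.

For more information, have a look at the ProcessModel Element documentation.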
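As a rough, hypothetical illustration of how these two settings scale: the processModel documentation describes maxWorkerThreads and minWorkerThreads as per-CPU values, so the totals depend on the number of CPUs. The numbers below are made up, and treating the thread limit as a cap on busy HttpApplication instances is a simplification that assumes one request per thread.

```python
# Hypothetical numbers; assumes maxWorkerThreads / minWorkerThreads are per-CPU values.
def worker_thread_limits(min_per_cpu: int, max_per_cpu: int, cpus: int) -> tuple:
    # Total thread-pool bounds for the whole worker process.
    return min_per_cpu * cpus, max_per_cpu * cpus


low, high = worker_thread_limits(min_per_cpu=1, max_per_cpu=20, cpus=4)
# Each request runs on one thread and each HttpApplication serves one request
# at a time, so roughly at most `high` instances (80 here) are busy at once.
```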

To summarize what we have said so far, when a request for a web application arrives:

• A Worker Process (w3wp.exe) is started, if it is not already running.

• An instance of ApplicationManager is created.

• An ApplicationPool is created.

• An instance of a Hosting Environment is created.

• A pool of HttpApplication instances is created (sized according to the machine.config settings).

So far, we have talked about just one web application, let's say WebSite1, under IIS. What happens if we create another application under IIS for WebSite2?

• The same process described above takes place.

• WebSite2 will be executed within the existing Worker Process w3wp.exe (where WebSite1 is running).

• The same Application Manager instance will manage WebSite2 as well. There is always one instance per Worker Process (w3wp.exe).

• WebSite2 will have its own AppDomain and Hosting Environment.
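The two lists above can be summarized as a small model: one lazily started worker process, one Application Manager inside it, and a separate AppDomain (with its own Hosting Environment) per web application. This is a hypothetical Python sketch; `get_worker_process`, `handle_request` and the class shapes are invented for illustration.

```python
# Hypothetical model of one w3wp.exe hosting two sites; names are illustrative only.
class HostingEnvironment:
    def __init__(self, site: str):
        self.site = site


class AppDomain:
    def __init__(self, site: str):
        self.hosting_environment = HostingEnvironment(site)   # one per AppDomain


class ApplicationManager:
    def __init__(self):
        self.domains = {}          # one AppDomain per web application

    def get_domain(self, site: str) -> AppDomain:
        if site not in self.domains:                # created on the first request
            self.domains[site] = AppDomain(site)
        return self.domains[site]


class WorkerProcess:
    def __init__(self):
        self.application_manager = ApplicationManager()   # exactly one per process


_running = None


def get_worker_process() -> WorkerProcess:
    global _running
    if _running is None:           # started only if it is not already running
        _running = WorkerProcess()
    return _running


def handle_request(site: str) -> AppDomain:
    return get_worker_process().application_manager.get_domain(site)
```

Requests for WebSite1 and WebSite2 end up in the same worker process and are handled by the same Application Manager, but each site gets its own AppDomain and Hosting Environment.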

It's very important to note that each web application runs in a separate AppDomain, so if one fails or does something wrong, it won't affect the other web applications, which can carry on their work. At this point, we should have a couple of other questions:

What would happen if a web application, let's say WebSite1, does something wrong that affects the Worker Process itself (even if that is quite unlikely)?

What if I want to recycle the application domain?

To summarize what we have said, an AppPool consists of one or more processes. Each web application that you run usually gets its own Application Domain. The issue is that when you assign multiple web applications to the same AppPool, they are separated by the Application Domain boundary but still run in the same process (w3wp.exe). This can be less reliable and less secure than using a separate AppPool for each web application. On the other hand, it can improve performance by reducing the overhead of running multiple processes.

An Internet Information Services (IIS) application pool is a grouping of URLs that is routed to one or more worker processes. Because application pools define a set of web applications that share one or more worker processes, they provide a convenient way to administer a set of web sites and applications and their corresponding worker processes. Process boundaries separate each worker process; therefore, a web site or application in an application pool will not be affected by application problems in other application pools. Application pools significantly increase both the reliability and manageability of a web infrastructure.
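The reliability trade-off between a shared and a separate application pool can be expressed as a one-line rule: a process-level crash takes down every site in the same pool. This is a hypothetical Python sketch that assumes one worker process per pool (no web garden); the function name and pool names are illustrative.

```python
# Hypothetical sketch: which sites go down when one site crashes its worker process.
# Assumes one worker process per application pool (no web garden).
def sites_affected_by_crash(crashing_site: str, pool_of: dict) -> set:
    """pool_of maps a site name to the name of its application pool."""
    pool = pool_of[crashing_site]
    # AppDomains isolate ordinary failures, but a process-level crash takes
    # down every site whose AppDomain lives in the same w3wp.exe.
    return {site for site, p in pool_of.items() if p == pool}


shared = {"WebSite1": "DefaultAppPool", "WebSite2": "DefaultAppPool"}
separate = {"WebSite1": "Pool1", "WebSite2": "Pool2"}
```

With the `shared` assignment, a crash in WebSite1 also affects WebSite2; with `separate`, only WebSite1 is affected, at the cost of running one more process.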
